Introduction to virtio

Preface

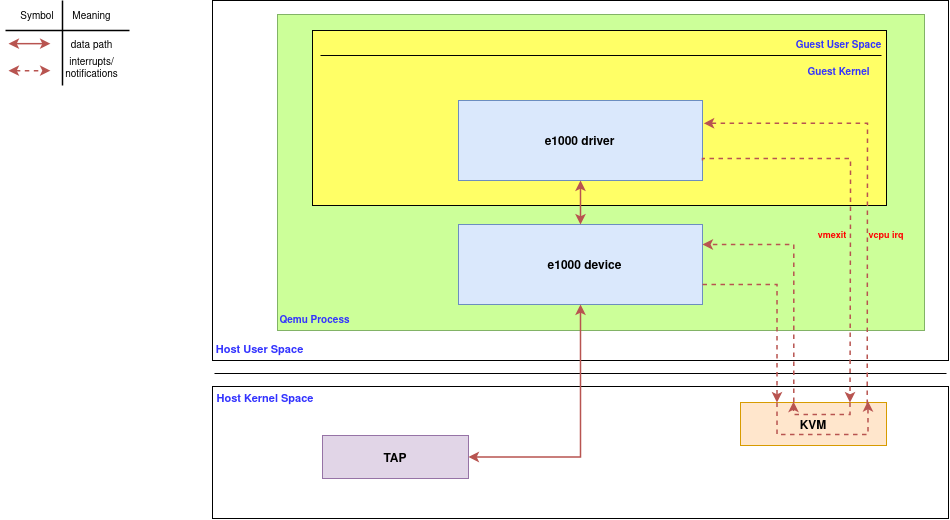

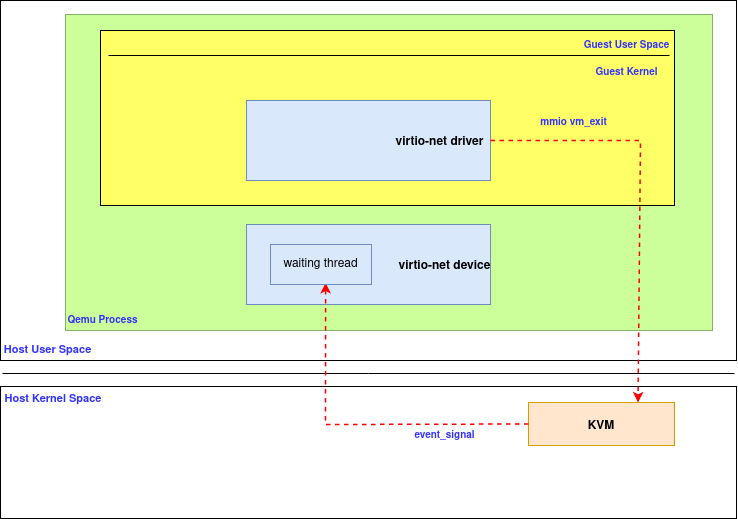

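In traditional device emulation, QEMU emulates a complete physical device. Every guest I/O operation therefore requires a VM exit and a vCPU interrupt, as shown below: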

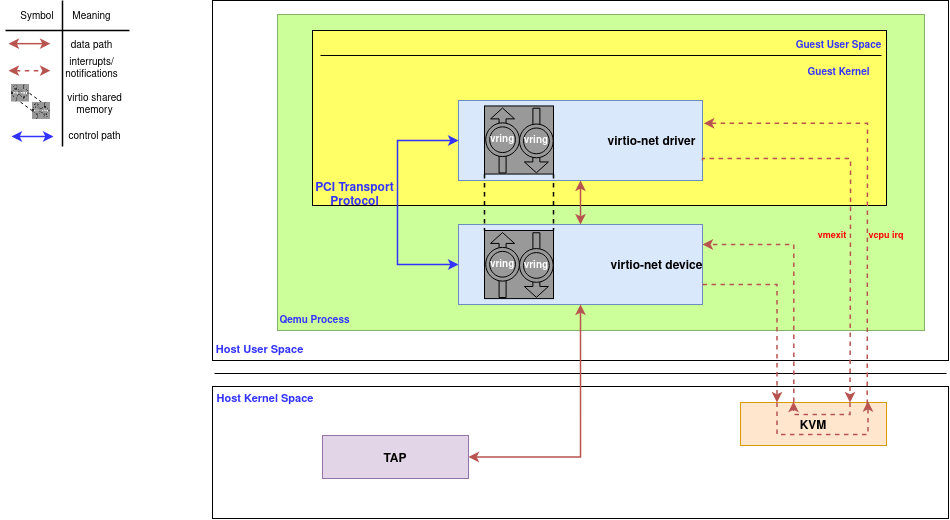

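The virtio protocol was created to improve VM I/O efficiency. In this scheme the guest knows that it is running in a virtualized environment. It loads the corresponding virtio bus driver and virtio device drivers to communicate with virtio devices, so not every guest I/O operation needs its own VM exit and vCPU interrupt. VM exits and vCPU interrupts are still required, but most of the many VM exits of traditional emulation are replaced by communication over memory shared between the guest and the virtio device, as illustrated.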

The virtio protocol

According to section 2 of the virtio specification, a virtio device usually consists of the following components.

virtqueue

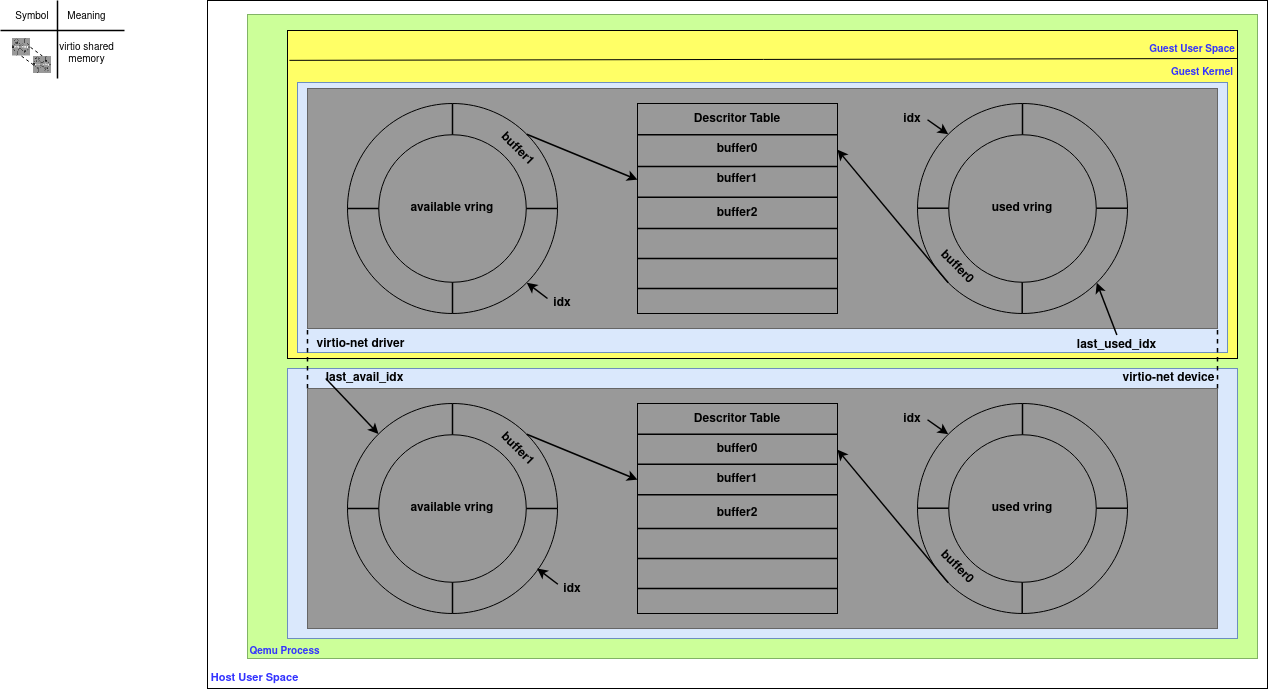

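The virtqueue is the mechanism for bulk data transfer between a virtio device and the guest. The driver and the virtio device share the virtqueue memory, and the overall layout is shown in the figure. Only split virtqueues are covered here; packed virtqueues are not.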

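When the driver wants to hand a request to the device, it fills a free buffer descriptor in the descriptor table and adds it to the available ring. It may then trigger an event by sending a notification to the virtio device, telling it that the buffer is ready. Notifications are covered in the notifications subsection below.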

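After processing the request, the device moves the consumed buffer from the available ring to the used ring. It may then trigger an event by notifying the guest that the buffer has been used.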

According to section 2.6 of the virtio specification, a virtqueue consists of a descriptor table, an available ring and a used ring.

descriptor table

The descriptor table describes the buffers that the driver prepares for the device. Each element has the format defined in section 2.7.5 of the virtio specification:

```c
struct virtq_desc {
        /* Address (guest-physical). */
        le64 addr;
        /* Length. */
        le32 len;

/* This marks a buffer as continuing via the next field. */
#define VIRTQ_DESC_F_NEXT   1
/* This marks a buffer as device write-only (otherwise device read-only). */
#define VIRTQ_DESC_F_WRITE     2
/* This means the buffer contains a list of buffer descriptors. */
#define VIRTQ_DESC_F_INDIRECT   4
        /* The flags as indicated above. */
        le16 flags;
        /* Next field if flags & NEXT */
        le16 next;
};
```

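Each descriptor describes one buffer, and addr is a guest-physical address. Descriptors can be chained through the next field. Each buffer is one of two kinds:

- device-readable: written by the guest and read by the device.
- device-writable: written by the device and read by the guest.

In both cases the descriptor itself is read-only for the device. A single chain can contain both kinds of buffers, with all device-readable descriptors placed before the device-writable ones.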

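What a buffer contains depends on the device type. The most common layout starts with a device-readable header that describes the request, followed by a device-writable trailer into which the device writes its result.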
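The sketch below makes the chaining rules concrete. It builds a two-descriptor request, a device-readable header followed by a device-writable one-byte status, and walks the chain the way a device might. It assumes a simplified host-endian copy of struct virtq_desc (the real fields are little-endian). The names struct desc, build_request() and walk_chain() are hypothetical and are not QEMU or Linux APIs.

```c
#include <assert.h>
#include <stdint.h>

/* Flag values from struct virtq_desc above. */
#define VIRTQ_DESC_F_NEXT  1
#define VIRTQ_DESC_F_WRITE 2

/* Hypothetical host-endian stand-in for struct virtq_desc. */
struct desc {
    uint64_t addr;   /* guest-physical address */
    uint32_t len;
    uint16_t flags;
    uint16_t next;
};

/* Build a chain at table[head]: device-readable header -> device-writable status byte. */
static void build_request(struct desc *table, uint16_t head, uint16_t tail,
                          uint64_t hdr_gpa, uint32_t hdr_len, uint64_t status_gpa)
{
    table[head] = (struct desc){ hdr_gpa, hdr_len, VIRTQ_DESC_F_NEXT, tail };
    table[tail] = (struct desc){ status_gpa, 1, VIRTQ_DESC_F_WRITE, 0 };
}

/* Walk a chain, summing readable and writable bytes.
 * Returns -1 for a malformed chain: a readable descriptor after a writable one,
 * an out-of-range next index, or a chain longer than the table (a loop). */
static int walk_chain(const struct desc *table, uint16_t qsize, uint16_t head,
                      uint32_t *readable, uint32_t *writable)
{
    int seen_write = 0;
    uint16_t i = head;

    *readable = *writable = 0;
    for (uint16_t n = 0; n < qsize; n++) {
        const struct desc *d = &table[i];
        if (d->flags & VIRTQ_DESC_F_WRITE) {
            seen_write = 1;
            *writable += d->len;
        } else {
            if (seen_write)
                return -1;
            *readable += d->len;
        }
        if (!(d->flags & VIRTQ_DESC_F_NEXT))
            return 0;           /* end of chain */
        i = d->next;
        if (i >= qsize)
            return -1;
    }
    return -1;
}
```

walk_chain() bounds the walk by the queue size. A buggy or malicious driver could otherwise build a cyclic chain and make the device loop forever.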

available ring

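The driver uses the available ring to offer buffers to the device. Its format is given in section 2.7.6 of the virtio specification:

```c
struct virtq_avail {
#define VIRTQ_AVAIL_F_NO_INTERRUPT      1
        le16 flags;
        le16 idx;
        le16 ring[ /* Queue Size */ ];
        le16 used_event; /* Only if VIRTIO_F_EVENT_IDX */
};
```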

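Each ring entry refers to the head of a descriptor chain in the descriptor table. Entries are written only by the driver and read only by the device.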

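idx is the position where the driver will place the next ring entry, taken modulo the queue size. Only the driver updates it. The device also keeps a private last_avail_idx (the name QEMU uses), which points to the next entry it has not yet consumed. The entries in [last_avail_idx, idx) are therefore the available entries still waiting to be processed.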
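idx and last_avail_idx are free-running 16-bit counters that wrap at 65536. The number of pending entries is their difference in unsigned 16-bit arithmetic, and the ring slot is the index modulo the queue size. The sketch below assumes a power-of-two queue size, as split virtqueues require. avail_pending() and ring_slot() are hypothetical helper names.

```c
#include <assert.h>
#include <stdint.h>

/* Pending entries between the device's last_avail_idx and avail->idx.
 * Unsigned 16-bit subtraction handles the wrap at 65536. */
static uint16_t avail_pending(uint16_t avail_idx, uint16_t last_avail_idx)
{
    return (uint16_t)(avail_idx - last_avail_idx);
}

/* ring[] slot for a free-running index; qsize must be a power of two. */
static uint16_t ring_slot(uint16_t idx, uint16_t qsize)
{
    assert(qsize != 0 && (qsize & (qsize - 1)) == 0);
    return idx & (qsize - 1);   /* same as idx % qsize */
}
```

For example, avail_pending(1, 65534) is 3: the driver has published entries 65534, 65535 and 0 since the device last looked. Because 65536 is a multiple of any power-of-two queue size, the modulo stays consistent across the wrap.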

used ring

Similarly, the device uses the used ring to return used buffers to the driver. Its format is given in section 2.7.8 of the virtio specification:

```c
struct virtq_used {
#define VIRTQ_USED_F_NO_NOTIFY  1
        le16 flags;
        le16 idx;
        struct virtq_used_elem ring[ /* Queue Size */];
        le16 avail_event; /* Only if VIRTIO_F_EVENT_IDX */
};

/* le32 is used here for ids for padding reasons. */
struct virtq_used_elem {
        /* Index of start of used descriptor chain. */
        le32 id;
        /*
         * The number of bytes written into the device writable portion of
         * the buffer described by the descriptor chain.
         */
        le32 len;
};
```

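Each ring entry holds two values: id, the head of the used descriptor chain, and len, the number of bytes the device actually wrote. Entries are written only by the device and read only by the driver.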

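idx is the position where the device will place the next ring entry, and only the device updates it. The driver keeps a private last_used_idx, which points to the next used entry it has not yet processed. The entries in [last_used_idx, idx) are therefore the used entries waiting to be reclaimed.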
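The single-threaded model below puts the two rings together and walks requests through the round trip described above:

1. The driver publishes a chain head on the available ring.
2. The device consumes it and reports it on the used ring.
3. The driver reclaims it.

The model is simplified on purpose. Fields are host-endian, and there are no memory barriers, no notifications and no descriptor contents. The hypothetical struct vq_model stands in for the real shared-memory layout.

```c
#include <assert.h>
#include <stdint.h>

#define QSZ 4   /* hypothetical queue size */

struct vq_model {
    uint16_t avail_idx;                      /* written by the driver */
    uint16_t avail_ring[QSZ];
    uint16_t used_idx;                       /* written by the device */
    struct { uint32_t id, len; } used_ring[QSZ];
    uint16_t last_avail_idx;                 /* device-private */
    uint16_t last_used_idx;                  /* driver-private */
};

/* Driver: publish descriptor chain 'head' on the available ring. */
static void driver_add(struct vq_model *q, uint16_t head)
{
    q->avail_ring[q->avail_idx % QSZ] = head;
    q->avail_idx++;   /* a real driver issues a write barrier before this */
}

/* Device: consume one available entry and report 'written' bytes as used.
 * Returns 0 if nothing was pending, 1 otherwise. */
static int device_process(struct vq_model *q, uint32_t written)
{
    if (q->last_avail_idx == q->avail_idx)
        return 0;
    uint16_t head = q->avail_ring[q->last_avail_idx % QSZ];
    q->last_avail_idx++;
    q->used_ring[q->used_idx % QSZ].id = head;
    q->used_ring[q->used_idx % QSZ].len = written;
    q->used_idx++;
    return 1;
}

/* Driver: reclaim one used entry; returns its head id, or -1 if none. */
static int driver_reclaim(struct vq_model *q, uint32_t *len)
{
    if (q->last_used_idx == q->used_idx)
        return -1;
    uint16_t slot = q->last_used_idx % QSZ;
    *len = q->used_ring[slot].len;
    q->last_used_idx++;
    return (int)q->used_ring[slot].id;
}
```

Each side only advances its own index and compares it with the other side's index. That is what lets the two rings work without locks in the real shared-memory setting, given the right barriers.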

device configuration space

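The device configuration space is generally used for parameters that rarely change or are set at initialization time. Unlike the PCI configuration space, it is specific to the device type, so different device types have different layouts. For example, the virtio-net configuration space is defined in section 5.1.4 of the virtio specification, and the virtio-blk one in section 5.2.4.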
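The device may change its configuration space while the driver is reading a multi-byte field. To handle this, the virtio specification recommends reading config_generation before and after the access and retrying if it changed. config_generation is part of the PCI common configuration structure described later.

The sketch below models that retry loop against a hypothetical fake_dev whose generation counter is bumped during the first two reads. The six bytes at offset 0 stand in for the virtio-net mac field. fake_dev and its helpers are illustrative names only.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical device: config bytes plus the transport's generation counter. */
struct fake_dev {
    uint8_t cfg[16];       /* device-specific configuration space */
    uint32_t generation;   /* bumped by the device on every config change */
    int changes_left;      /* how many reads get "interrupted" by a change */
};

static uint32_t get_generation(const struct fake_dev *d)
{
    return d->generation;
}

static void read_cfg_bytes(struct fake_dev *d, unsigned off, void *buf, unsigned len)
{
    assert(off + len <= sizeof d->cfg);
    memcpy(buf, d->cfg + off, len);
    if (d->changes_left > 0) {   /* simulate a concurrent device-side update */
        d->changes_left--;
        d->generation++;
    }
}

/* Read a field consistently; returns the number of attempts needed. */
static int read_cfg_consistent(struct fake_dev *d, unsigned off, void *buf, unsigned len)
{
    uint32_t before, after;
    int tries = 0;

    do {
        before = get_generation(d);
        read_cfg_bytes(d, off, buf, len);
        after = get_generation(d);
        tries++;
    } while (before != after);
    return tries;
}
```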

notifications

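The driver and the virtio device use notifications to tell each other that there is information to pass on. According to section 2.3 of the virtio specification, there are three kinds: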

- Configuration change notifications

- Available buffer notifications

- Used buffer notifications

How these notifications are delivered depends on the transport.

Configuration change notifications

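A configuration change notification is sent by the device to the guest. It indicates that the device configuration space described above has changed.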

QEMU typically delivers it by injecting the configuration-change MSI-X interrupt into the guest.

Used buffer notifications

A used buffer notification is also sent by the device to the guest. It indicates that new used buffers have been added to the used ring described above.

QEMU typically delivers it by injecting the MSI-X interrupt assigned to that virtqueue.

Available buffer notifications

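An available buffer notification goes the other way: the guest driver sends it to the device. It indicates that new available buffers have been added to the available ring.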

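QEMU typically sets up a dedicated MMIO region for this. When the driver writes to it, the guest takes a VM exit into KVM, and KVM then notifies QEMU through the ioeventfd mechanism.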
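With ioeventfd, a guest kick only increments an eventfd counter instead of running QEMU code synchronously. As a result, several kicks can collapse into one wake-up.

The sketch below shows only that user-space eventfd behavior, using the Linux eventfd(2) API. It registers nothing with KVM; that is done with the KVM_IOEVENTFD ioctl, which is not shown. kicks_then_drain() is a hypothetical name.

```c
#include <assert.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Write 'kicks' increments to a fresh eventfd, then read it once.
 * Returns the value of that single read, or -1 if the read would
 * block (no kicks were made) or any call fails. */
static long kicks_then_drain(int kicks)
{
    assert(kicks >= 0);
    int fd = eventfd(0, EFD_NONBLOCK);
    if (fd < 0)
        return -1;
    for (int i = 0; i < kicks; i++) {
        if (eventfd_write(fd, 1) != 0) {   /* adds 1 to the counter */
            close(fd);
            return -1;
        }
    }
    eventfd_t value = 0;
    int rc = eventfd_read(fd, &value);     /* returns the sum and resets it to 0 */
    close(fd);
    return rc == 0 ? (long)value : -1;
}
```

kicks_then_drain(3) returns 3 from a single read. The consumer therefore learns that "something was kicked" once and then scans the available ring, rather than handling each kick separately.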

device status field

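While initializing a device, the virtio driver follows the steps in section 3.1 of the virtio specification. The device status field gives a simple, low-level indication of which of these steps have been completed. It helps to picture it as traffic lights on a console indicating the status of each device.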

The initialization sequence is:

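- Reset the device.

- Set the ACKNOWLEDGE status bit: the guest has detected the device.

- Set the DRIVER status bit: the guest knows which driver to use to talk to the device.

- Read the device feature bits, and write back the subset of feature bits that the driver understands.

- Set the FEATURES_OK status bit. After this step the driver must not accept new feature bits.

- Re-read the device status field and check that FEATURES_OK is still set. If it is not, the device does not support the subset of feature bits the driver chose, and the device is unusable.

- Perform device-specific setup, including configuring the device's virtqueues.

- Set the DRIVER_OK status bit, indicating that the device has been initialized.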

The device status field starts out as 0, and the device resets it to 0 during a device reset.

ACKNOWLEDGE

ACKNOWLEDGE has the value 1. When set, it indicates that the guest has detected the device and recognized it as a valid virtio device.

DRIVER

DRIVER has the value 2. When set, it indicates that the guest knows how to drive the device, that is, which driver to use to interact with it.

FAILED

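FAILED has the value 128. When set, it indicates that something went wrong in the guest and it has given up on the virtio device. The cause may be an internal error, a driver error, or a fatal error that occurred while operating the device.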

FEATURES_OK

FEATURES_OK has the value 8. When set, it indicates that the guest driver and the device have finished negotiating feature bits.

DRIVER_OK

DRIVER_OK has the value 4. When set, it indicates that the guest driver has set up the device and is ready to drive it.

DEVICE_NEEDS_RESET

DEVICE_NEEDS_RESET has the value 64. When set, it indicates that the virtio device has hit an error it cannot recover from.
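The sketch below models the initialization sequence above using the status values just listed. The device model is hypothetical. It simply clears FEATURES_OK when the driver accepts a feature bit that the device never offered, which is the situation the re-read in step 6 is meant to catch. struct model_dev, write_status() and driver_init() are illustrative names.

```c
#include <assert.h>
#include <stdint.h>

enum {
    ACKNOWLEDGE = 1, DRIVER = 2, DRIVER_OK = 4, FEATURES_OK = 8,
    DEVICE_NEEDS_RESET = 64, FAILED = 128,
};

/* Hypothetical device: status register plus offered/accepted features. */
struct model_dev {
    uint8_t status;
    uint64_t offered, accepted;
};

static void write_status(struct model_dev *d, uint8_t s)
{
    if (s == 0) {                 /* writing 0 resets the device */
        d->status = 0;
        d->accepted = 0;
        return;
    }
    if ((s & FEATURES_OK) && (d->accepted & ~d->offered))
        s &= (uint8_t)~FEATURES_OK;   /* device refuses the feature subset */
    d->status = s;
}

/* Driver side of the sequence; returns the final status value. */
static uint8_t driver_init(struct model_dev *d, uint64_t driver_features)
{
    write_status(d, 0);                           /* 1. reset */
    write_status(d, d->status | ACKNOWLEDGE);     /* 2. */
    write_status(d, d->status | DRIVER);          /* 3. */
    d->accepted = driver_features;                /* 4. should be a subset of d->offered */
    write_status(d, d->status | FEATURES_OK);     /* 5. */
    if (!(d->status & FEATURES_OK)) {             /* 6. re-read */
        write_status(d, d->status | FAILED);
        return d->status;
    }
    /* 7. device-specific setup (virtqueues, ...) would go here */
    write_status(d, d->status | DRIVER_OK);       /* 8. */
    return d->status;
}
```

A well-behaved driver ends with status 15 (ACKNOWLEDGE | DRIVER | FEATURES_OK | DRIVER_OK). The second test case shows a driver that accepts an unoffered bit ending with FAILED set instead.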

feature bits

Every virtio device has its own set of supported feature bits.

During device initialization, the driver reads this set and tells the device which subset it supports.

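This mechanism provides both forward and backward compatibility:

- If a device is enhanced with a new feature bit, an older driver does not know that bit and so never writes it back to the device.
- If a driver adds a feature bit that the device does not support, the driver never sees that bit offered by the device and so does not use it.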

virtio transport

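According to section 4 of the virtio specification, virtio can run over a variety of buses. The protocol is therefore split into a generic part and a bus-specific (transport) part. Every transport must provide the five components introduced in the previous subsections, but how the driver and the virtio device set these components up is transport-specific. The main transports are:

- Virtio Over PCI Bus
- Virtio Over MMIO
- Virtio Over Channel I/O

The virtio-net-pci device naturally uses Virtio Over PCI Bus.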

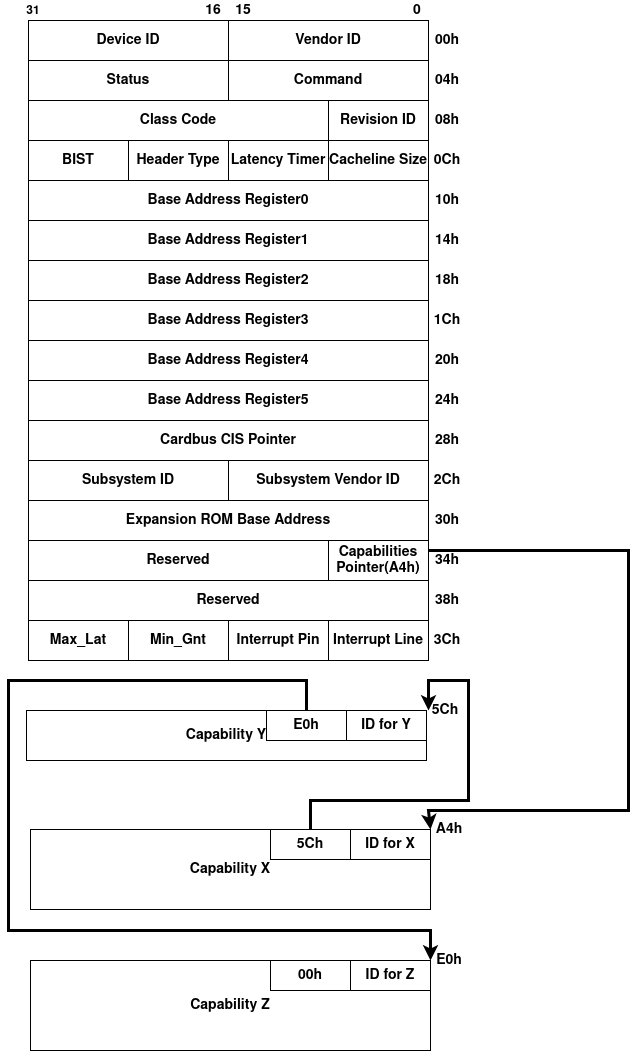

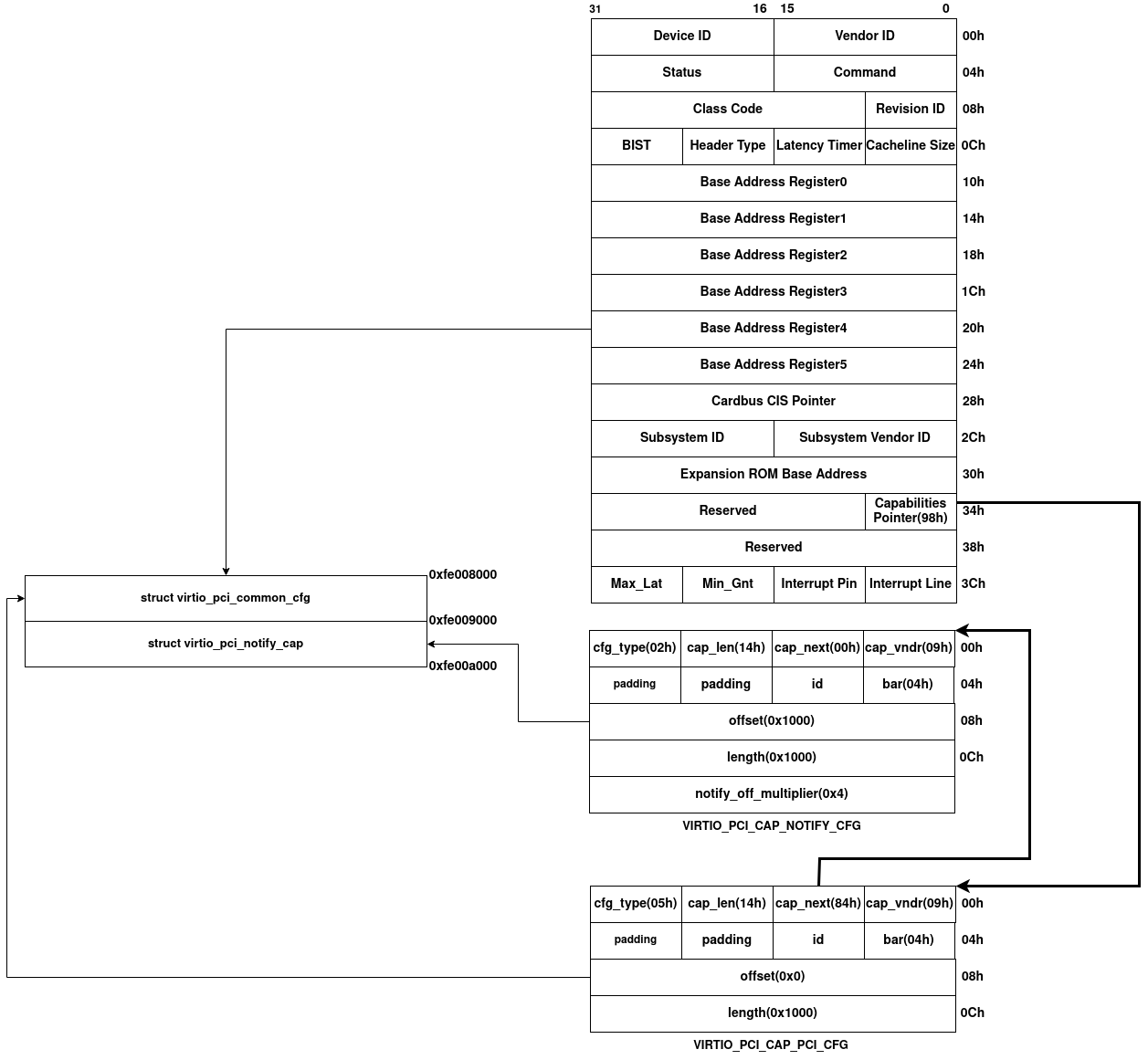

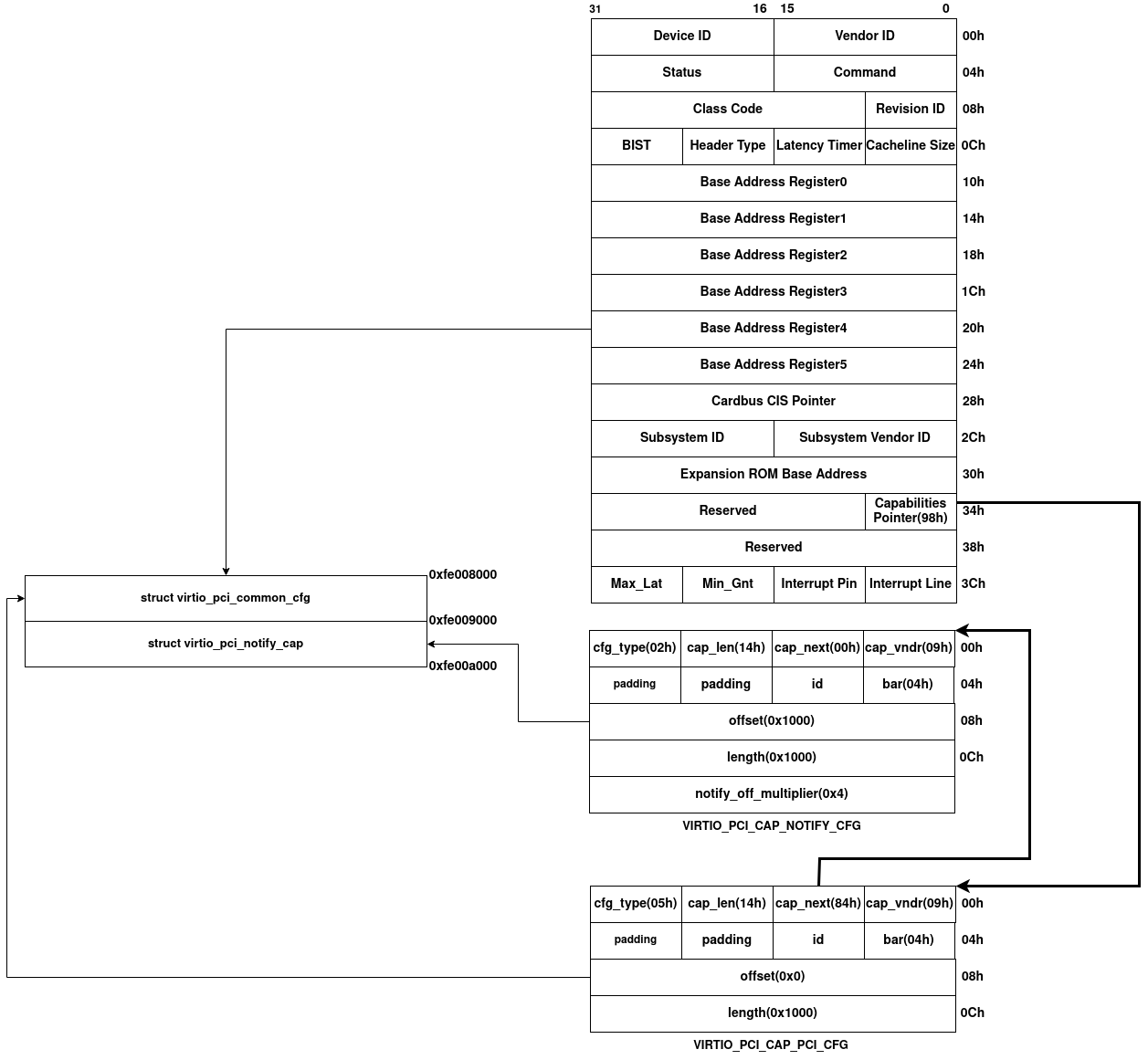

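According to section 4.1.3 of the virtio specification, Virtio Over PCI Bus exposes its configuration through PCI capabilities. As covered in the earlier discussion of QEMU PCI devices, the standard PCI configuration space is laid out as shown in the figures.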

virtio uses these PCI capabilities to point to the configuration structures of its components, as illustrated.

capability

Specifically, the location of each configuration structure is given by a PCI capability in the following format:

```c
struct virtio_pci_cap {
        u8 cap_vndr;    /* Generic PCI field: PCI_CAP_ID_VNDR */
        u8 cap_next;    /* Generic PCI field: next ptr. */
        u8 cap_len;     /* Generic PCI field: capability length */
        u8 cfg_type;    /* Identifies the structure. */
        u8 bar;         /* Where to find it. */
        u8 id;          /* Multiple capabilities of the same type */
        u8 padding[2];  /* Pad to full dword. */
        le32 offset;    /* Offset within bar. */
        le32 length;    /* Length of the structure, in bytes. */
};
```

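- cap_vndr is 0x09 (PCI_CAP_ID_VNDR), marking this as a vendor-specific capability. cap_next holds the offset of the next PCI capability in the PCI configuration space.

- cap_len is the length of this capability, including any data that follows struct virtio_pci_cap.

- cfg_type identifies which configuration structure the capability describes. The possible values are VIRTIO_PCI_CAP_COMMON_CFG, VIRTIO_PCI_CAP_NOTIFY_CFG, VIRTIO_PCI_CAP_ISR_CFG, VIRTIO_PCI_CAP_DEVICE_CFG, VIRTIO_PCI_CAP_PCI_CFG, VIRTIO_PCI_CAP_SHARED_MEMORY_CFG and VIRTIO_PCI_CAP_VENDOR_CFG.

- bar selects one of the BARs in the PCI configuration space. The configuration structure lives in the memory or I/O space that this BAR maps.

- id distinguishes multiple capabilities of the same type.

- offset is where the configuration structure starts within the BAR.

- length is the length of the configuration structure within the BAR.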
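The sketch below shows how a driver might locate these structures. It walks the standard PCI capability list in a 256-byte configuration-space image and decodes the first virtio_pci_cap whose cfg_type matches. The capabilities pointer is at 0x34 and the vendor-specific capability ID is 0x09.

The cfg_type values used in the test (1 for VIRTIO_PCI_CAP_COMMON_CFG, 2 for VIRTIO_PCI_CAP_NOTIFY_CFG) come from the virtio specification. struct vcap and find_virtio_cap() are hypothetical names. Real drivers read config space through platform accessors rather than from a byte array.

```c
#include <assert.h>
#include <stdint.h>

#define PCI_STATUS           0x06
#define PCI_STATUS_CAP_LIST  0x10
#define PCI_CAPABILITY_LIST  0x34
#define PCI_CAP_ID_VNDR      0x09

/* Decoded fields of struct virtio_pci_cap. */
struct vcap {
    uint8_t cfg_type, bar, id;
    uint32_t offset, length;
};

static uint32_t le32_at(const uint8_t *p)
{
    return (uint32_t)p[0] | (uint32_t)p[1] << 8 |
           (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

/* Returns 0 and fills *out if a matching virtio capability is found, else -1. */
static int find_virtio_cap(const uint8_t cfg[256], uint8_t want_type, struct vcap *out)
{
    if (!(cfg[PCI_STATUS] & PCI_STATUS_CAP_LIST))
        return -1;                                   /* no capability list */
    uint8_t pos = cfg[PCI_CAPABILITY_LIST] & 0xfc;
    for (int guard = 0; pos != 0 && guard < 48; guard++) {   /* guard against loops */
        const uint8_t *c = &cfg[pos];
        if (c[0] == PCI_CAP_ID_VNDR && c[3] == want_type && pos <= 256 - 16) {
            out->cfg_type = c[3];
            out->bar = c[4];
            out->id = c[5];
            out->offset = le32_at(c + 8);
            out->length = le32_at(c + 12);
            return 0;
        }
        pos = c[1] & 0xfc;                           /* cap_next */
    }
    return -1;
}
```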

Depending on cfg_type, the structure may be followed by additional data. For example, VIRTIO_PCI_CAP_NOTIFY_CFG uses:

```c
struct virtio_pci_notify_cap {
        struct virtio_pci_cap cap;
        le32 notify_off_multiplier; /* Multiplier for queue_notify_off. */
};
```
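The driver combines these fields to find where to write for a given queue. The notification address is cap.offset + queue_notify_off * notify_off_multiplier inside the BAR named by cap.bar. Without VIRTIO_F_NOTIFICATION_DATA, the driver writes the 16-bit virtqueue index to that address.

The worked sketch below uses a made-up BAR base. The multipliers 4 and 0x1000 are assumed to mirror QEMU's default and page-per-vq layouts.

```c
#include <assert.h>
#include <stdint.h>

/* Address the driver writes to in order to notify (kick) one virtqueue. */
static uint64_t notify_addr(uint64_t bar_base, uint32_t cap_offset,
                            uint16_t queue_notify_off, uint32_t notify_off_multiplier)
{
    return bar_base + cap_offset + (uint64_t)queue_notify_off * notify_off_multiplier;
}
```

With a multiplier of 4, every queue gets its own 4-byte slot in a compact region. With 0x1000, each queue gets its own page, which can make it easier to trap or map notifications per queue.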

Configuration structures

As described above, the capability configuration structures include VIRTIO_PCI_CAP_COMMON_CFG, VIRTIO_PCI_CAP_NOTIFY_CFG, VIRTIO_PCI_CAP_ISR_CFG, VIRTIO_PCI_CAP_DEVICE_CFG, VIRTIO_PCI_CAP_PCI_CFG, VIRTIO_PCI_CAP_SHARED_MEMORY_CFG and VIRTIO_PCI_CAP_VENDOR_CFG.

Take the most important one, VIRTIO_PCI_CAP_COMMON_CFG, as an example. According to section 4.1.4.3 of the virtio specification, its layout is:

```c
struct virtio_pci_common_cfg {
        /* About the whole device. */
        le32 device_feature_select;     /* read-write */
        le32 device_feature;            /* read-only for driver */
        le32 driver_feature_select;     /* read-write */
        le32 driver_feature;            /* read-write */
        le16 config_msix_vector;        /* read-write */
        le16 num_queues;                /* read-only for driver */
        u8 device_status;               /* read-write */
        u8 config_generation;           /* read-only for driver */

        /* About a specific virtqueue. */
        le16 queue_select;              /* read-write */
        le16 queue_size;                /* read-write */
        le16 queue_msix_vector;         /* read-write */
        le16 queue_enable;              /* read-write */
        le16 queue_notify_off;          /* read-only for driver */
        le64 queue_desc;                /* read-write */
        le64 queue_driver;              /* read-write */
        le64 queue_device;              /* read-write */
        le16 queue_notify_data;         /* read-only for driver */
        le16 queue_reset;               /* read-write */
};
```

This structure carries the information about virtqueues, the device status field and feature bits discussed earlier. The fields mean the following:

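- device_feature_select is used by the driver to select which device feature bits to read. For example, 0 selects bits 0 to 31 and 1 selects bits 32 to 63.

- device_feature returns the device feature bits in the window selected by device_feature_select.

- driver_feature_select is the counterpart of device_feature_select. The driver uses it to select which range of feature bits it writes: 0 for bits 0 to 31, 1 for bits 32 to 63.

- driver_feature holds the feature bits the driver accepts, for the window selected by driver_feature_select.

- config_msix_vector sets the MSI-X vector used for configuration change notifications.

- num_queues is the maximum number of virtqueues supported by the device.

- device_status reads and writes the device status field.

- config_generation is changed by the device every time the device configuration changes.

- queue_select selects the virtqueue that the following queue_* fields refer to.

- queue_size is the size of the virtqueue selected by queue_select.

- queue_msix_vector sets the MSI-X vector of the selected virtqueue.

- queue_enable controls whether the selected virtqueue is enabled.

- queue_notify_off is used to compute the offset of the selected virtqueue's notification address within the VIRTIO_PCI_CAP_NOTIFY_CFG structure.

- queue_desc holds the guest-physical address of the selected virtqueue's descriptor table.

- queue_driver holds the guest-physical address of the selected virtqueue's available ring.

- queue_device holds the guest-physical address of the selected virtqueue's used ring.

- queue_reset controls whether the selected virtqueue should be reset.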

Together, these fields cover almost all of the setup for the virtio components introduced earlier.
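common_cfg exposes the 64-bit feature set through 32-bit registers, so the driver has to page through it with the select fields. The sketch below models only the four feature registers, using a hypothetical struct common_cfg_model. It:

1. reads both device windows,
2. keeps the intersection with what the driver supports, which is what gives the compatibility described in the feature bits subsection,
3. writes the result back window by window.

Bits 5, 29 and 32 in the test are VIRTIO_NET_F_MAC, VIRTIO_RING_F_EVENT_IDX and VIRTIO_F_VERSION_1.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of the feature registers in struct virtio_pci_common_cfg. */
struct common_cfg_model {
    uint64_t dev_features;            /* what the device offers */
    uint64_t drv_features;            /* what the driver has written */
    uint32_t device_feature_select;   /* 0: bits 0..31, 1: bits 32..63 */
    uint32_t driver_feature_select;
};

/* Device side: value seen by a read of device_feature. */
static uint32_t read_device_feature(const struct common_cfg_model *c)
{
    if (c->device_feature_select >= 2)
        return 0;
    return (uint32_t)(c->dev_features >> (32 * c->device_feature_select));
}

/* Device side: effect of a write to driver_feature. */
static void write_driver_feature(struct common_cfg_model *c, uint32_t val)
{
    if (c->driver_feature_select >= 2)
        return;
    unsigned shift = 32 * c->driver_feature_select;
    c->drv_features &= ~((uint64_t)UINT32_MAX << shift);
    c->drv_features |= (uint64_t)val << shift;
}

/* Driver side: read both windows, accept the supported subset, write it back. */
static uint64_t negotiate_via_windows(struct common_cfg_model *c, uint64_t supported)
{
    uint64_t offered = 0;
    for (uint32_t sel = 0; sel < 2; sel++) {
        c->device_feature_select = sel;
        offered |= (uint64_t)read_device_feature(c) << (32 * sel);
    }
    uint64_t accepted = offered & supported;
    for (uint32_t sel = 0; sel < 2; sel++) {
        c->driver_feature_select = sel;
        write_driver_feature(c, (uint32_t)(accepted >> (32 * sel)));
    }
    return accepted;
}
```

In the test, the device offers VIRTIO_RING_F_EVENT_IDX but an older driver does not know it, so the bit is simply never accepted.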

The virtio device

Here we use virtio-net-pci as an example to analyze the virtio protocol implementation in QEMU.

virtio-pci devices have no static TypeInfo variable. Instead, virtio_pci_types_register() generates and registers the corresponding TypeInfo at runtime. For virtio-net-pci, virtio_pci_types_register() builds and registers TypeInfo variables from virtio_net_pci_info:

```c
static const VirtioPCIDeviceTypeInfo virtio_net_pci_info = {
    .base_name             = TYPE_VIRTIO_NET_PCI,
    .generic_name          = "virtio-net-pci",
    .transitional_name     = "virtio-net-pci-transitional",
    .non_transitional_name = "virtio-net-pci-non-transitional",
    .instance_size = sizeof(VirtIONetPCI),
    .instance_init = virtio_net_pci_instance_init,
    .class_init    = virtio_net_pci_class_init,
};

static void virtio_net_pci_register(void)
{
    virtio_pci_types_register(&virtio_net_pci_info);
}

void virtio_pci_types_register(const VirtioPCIDeviceTypeInfo *t)
{
    char *base_name = NULL;
    TypeInfo base_type_info = {
        .name          = t->base_name,
        .parent        = t->parent ? t->parent : TYPE_VIRTIO_PCI,
        .instance_size = t->instance_size,
        .instance_init = t->instance_init,
        .instance_finalize = t->instance_finalize,
        .class_size    = t->class_size,
        .abstract      = true,
        .interfaces    = t->interfaces,
    };
    TypeInfo generic_type_info = {
        .name = t->generic_name,
        .parent = base_type_info.name,
        .class_init = virtio_pci_generic_class_init,
        .interfaces = (InterfaceInfo[]) {
            { INTERFACE_PCIE_DEVICE },
            { INTERFACE_CONVENTIONAL_PCI_DEVICE },
            { }
        },
    };

    if (!base_type_info.name) {
        /* No base type -> register a single generic device type */
        /* use intermediate %s-base-type to add generic device props */
        base_name = g_strdup_printf("%s-base-type", t->generic_name);
        base_type_info.name = base_name;
        base_type_info.class_init = virtio_pci_generic_class_init;

        generic_type_info.parent = base_name;
        generic_type_info.class_init = virtio_pci_base_class_init;
        generic_type_info.class_data = (void *)t;

        assert(!t->non_transitional_name);
        assert(!t->transitional_name);
    } else {
        base_type_info.class_init = virtio_pci_base_class_init;
        base_type_info.class_data = (void *)t;
    }

    type_register(&base_type_info);
    if (generic_type_info.name) {
        type_register(&generic_type_info);
    }
    ...
}
```

The TypeInfo that is eventually generated looks like this:

```
pwndbg> frame
#0  virtio_pci_types_register (t=0x555556ed8840 <virtio_net_pci_info>) at ../../qemu/hw/virtio/virtio-pci.c:2616
2616        if (t->non_transitional_name) {
pwndbg> p generic_type_info
$1 = {
  name = 0x5555562ddbf5 "virtio-net-pci",
  parent = 0x5555562ddbb6 "virtio-net-pci-base",
  instance_size = 0,
  instance_align = 0,
  instance_init = 0x0,
  instance_post_init = 0x0,
  instance_finalize = 0x0,
  abstract = false,
  class_size = 0,
  class_init = 0x555555b74f0b <virtio_pci_generic_class_init>,
  class_base_init = 0x0,
  class_data = 0x0,
  interfaces = 0x7fffffffd700
}
```

Initialization

To analyze how the virtio device is initialized, we first list the relevant TypeInfo variables:

```
pwndbg> p generic_type_info
$4 = {
  name = 0x5555562ddbf5 "virtio-net-pci",
  parent = 0x5555562ddbb6 "virtio-net-pci-base",
  instance_size = 0,
  instance_align = 0,
  instance_init = 0x0,
  instance_post_init = 0x0,
  instance_finalize = 0x0,
  abstract = false,
  class_size = 0,
  class_init = 0x555555b74f0b <virtio_pci_generic_class_init>,
  class_base_init = 0x0,
  class_data = 0x0,
  interfaces = 0x7fffffffd700
}
pwndbg> p base_type_info
$5 = {
  name = 0x5555562ddbb6 "virtio-net-pci-base",
  parent = 0x55555626674d "virtio-pci",
  instance_size = 43376,
  instance_align = 0,
  instance_init = 0x555555e0fa2b <virtio_net_pci_instance_init>,
  instance_post_init = 0x0,
  instance_finalize = 0x0,
  abstract = true,
  class_size = 0,
  class_init = 0x555555b74ec1 <virtio_pci_base_class_init>,
  class_base_init = 0x0,
  class_data = 0x555556ed8840 <virtio_net_pci_info>,
  interfaces = 0x0
}
pwndbg> p virtio_pci_info
$6 = {
  name = 0x55555626674d "virtio-pci",
  parent = 0x5555562666ba "pci-device",
  instance_size = 34032,
  instance_align = 0,
  instance_init = 0x0,
  instance_post_init = 0x0,
  instance_finalize = 0x0,
  abstract = true,
  class_size = 248,
  class_init = 0x555555b74dd1 <virtio_pci_class_init>,
  class_base_init = 0x0,
  class_data = 0x0,
  interfaces = 0x0
}
pwndbg> p pci_device_type_info
$7 = {
  name = 0x55555623a47a "pci-device",
  parent = 0x55555623a35d "device",
  instance_size = 2608,
  instance_align = 0,
  instance_init = 0x0,
  instance_post_init = 0x0,
  instance_finalize = 0x0,
  abstract = true,
  class_size = 232,
  class_init = 0x555555a9c002 <pci_device_class_init>,
  class_base_init = 0x555555a9c07d <pci_device_class_base_init>,
  class_data = 0x0,
  interfaces = 0x0
}
pwndbg> p device_type_info
$8 = {
  name = 0x5555562f9c0d "device",
  parent = 0x5555562f9f27 "object",
  instance_size = 160,
  instance_align = 0,
  instance_init = 0x555555e9ca7f <device_initfn>,
  instance_post_init = 0x555555e9caf9 <device_post_init>,
  instance_finalize = 0x555555e9cb30 <device_finalize>,
  abstract = true,
  class_size = 176,
  class_init = 0x555555e9cf54 <device_class_init>,
  class_base_init = 0x555555e9cd10 <device_class_base_init>,
  class_data = 0x0,
  interfaces = 0x5555570085a0 <__compound_literal.0>
}
```

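Walking up the hierarchy, virtio_pci_info is the most-derived type with a non-zero class_size, so the virtio device uses struct VirtioPCIClass for its class data. base_type_info is the most-derived type with a non-zero instance_size, and as shown in the virtio device subsection that size is sizeof(VirtIONetPCI). The virtio device therefore uses struct VirtIONetPCI for its object data.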
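The size rule used above can be checked with a small model. When a type's instance_size or class_size is 0, QOM falls back to its parent's value. As far as we can tell, this is what type_object_get_size() and type_class_get_size() in qom/object.c do.

The table below copies the sizes from the pwndbg dump. struct type_rec and effective_size() are hypothetical and are not QEMU's TypeImpl.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Toy type table built from the pwndbg output above. */
struct type_rec {
    const char *name;
    const char *parent;
    size_t instance_size;
    size_t class_size;
};

static const struct type_rec types[] = {
    { "virtio-net-pci",      "virtio-net-pci-base", 0,     0   },
    { "virtio-net-pci-base", "virtio-pci",          43376, 0   },
    { "virtio-pci",          "pci-device",          34032, 248 },
    { "pci-device",          "device",              2608,  232 },
    { "device",              "object",              160,   176 },
};

static const struct type_rec *lookup(const char *name)
{
    for (size_t i = 0; i < sizeof types / sizeof types[0]; i++)
        if (strcmp(types[i].name, name) == 0)
            return &types[i];
    return NULL;   /* e.g. "object", which is not in this table */
}

/* A size of 0 means "inherit from the parent": walk up until non-zero. */
static size_t effective_size(const char *name, int want_class)
{
    for (const struct type_rec *t = lookup(name); t != NULL;
         t = t->parent ? lookup(t->parent) : NULL) {
        size_t s = want_class ? t->class_size : t->instance_size;
        if (s != 0)
            return s;
    }
    return 0;
}
```

effective_size("virtio-net-pci", 1) yields 248, the VirtioPCIClass size. effective_size("virtio-net-pci", 0) yields 43376, the VirtIONetPCI size.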

Class initialization

Based on the previous subsection, the virtio device initializes its class structures with these functions:

- virtio_pci_generic_class_init()
- virtio_pci_base_class_init()
- virtio_pci_class_init()
- pci_device_class_init()
- device_class_init()

QOM runs them starting from the root type, that is, in the reverse of the order listed:

```c
static void virtio_pci_generic_class_init(ObjectClass *klass, void *data)
{
    DeviceClass *dc = DEVICE_CLASS(klass);

    device_class_set_props(dc, virtio_pci_generic_properties);
}

static void virtio_pci_base_class_init(ObjectClass *klass, void *data)
{
    const VirtioPCIDeviceTypeInfo *t = data;
    if (t->class_init) {
        t->class_init(klass, NULL);
    }
}

static void virtio_pci_class_init(ObjectClass *klass, void *data)
{
    DeviceClass *dc = DEVICE_CLASS(klass);
    PCIDeviceClass *k = PCI_DEVICE_CLASS(klass);
    VirtioPCIClass *vpciklass = VIRTIO_PCI_CLASS(klass);
    ResettableClass *rc = RESETTABLE_CLASS(klass);

    device_class_set_props(dc, virtio_pci_properties);
    k->realize = virtio_pci_realize;
    k->exit = virtio_pci_exit;
    k->vendor_id = PCI_VENDOR_ID_REDHAT_QUMRANET;
    k->revision = VIRTIO_PCI_ABI_VERSION;
    k->class_id = PCI_CLASS_OTHERS;
    device_class_set_parent_realize(dc, virtio_pci_dc_realize,
                                    &vpciklass->parent_dc_realize);
    rc->phases.hold = virtio_pci_bus_reset_hold;
}

static void pci_device_class_init(ObjectClass *klass, void *data)
{
    DeviceClass *k = DEVICE_CLASS(klass);

    k->realize = pci_qdev_realize;
    k->unrealize = pci_qdev_unrealize;
    k->bus_type = TYPE_PCI_BUS;
    device_class_set_props(k, pci_props);
}

static void device_class_init(ObjectClass *class, void *data)
{
    DeviceClass *dc = DEVICE_CLASS(class);
    VMStateIfClass *vc = VMSTATE_IF_CLASS(class);
    ResettableClass *rc = RESETTABLE_CLASS(class);

    class->unparent = device_unparent;

    /* by default all devices were considered as hotpluggable,
     * so with intent to check it in generic qdev_unplug() /
     * device_set_realized() functions make every device
     * hotpluggable. Devices that shouldn't be hotpluggable,
     * should override it in their class_init()
     */
    dc->hotpluggable = true;
    dc->user_creatable = true;
    vc->get_id = device_vmstate_if_get_id;
    rc->get_state = device_get_reset_state;
    rc->child_foreach = device_reset_child_foreach;

    /*
     * @device_phases_reset is put as the default reset method below, allowing
     * to do the multi-phase transition from base classes to leaf classes. It
     * allows a legacy-reset Device class to extend a multi-phases-reset
     * Device class for the following reason:
     * + If a base class B has been moved to multi-phase, then it does not
     *   override this default reset method and may have defined phase methods.
     * + A child class C (extending class B) which uses
     *   device_class_set_parent_reset() (or similar means) to override the
     *   reset method will still work as expected. @device_phases_reset function
     *   will be registered as the parent reset method and effectively call
     *   parent reset phases.
     */
    dc->reset = device_phases_reset;
    rc->get_transitional_function = device_get_transitional_reset;

    object_class_property_add_bool(class, "realized",
                                   device_get_realized, device_set_realized);
    object_class_property_add_bool(class, "hotpluggable",
                                   device_get_hotpluggable, NULL);
    object_class_property_add_bool(class, "hotplugged",
                                   device_get_hotplugged, NULL);
    object_class_property_add_link(class, "parent_bus", TYPE_BUS,
                                   offsetof(DeviceState, parent_bus), NULL, 0);
}
```

As described earlier, virtio_pci_base_class_init() receives virtio_net_pci_info as its argument, and that structure's class_init field is virtio_net_pci_class_init():

```c
static void virtio_net_pci_class_init(ObjectClass *klass, void *data)
{
    DeviceClass *dc = DEVICE_CLASS(klass);
    PCIDeviceClass *k = PCI_DEVICE_CLASS(klass);
    VirtioPCIClass *vpciklass = VIRTIO_PCI_CLASS(klass);

    k->romfile = "efi-virtio.rom";
    k->vendor_id = PCI_VENDOR_ID_REDHAT_QUMRANET;
    k->device_id = PCI_DEVICE_ID_VIRTIO_NET;
    k->revision = VIRTIO_PCI_ABI_VERSION;
    k->class_id = PCI_CLASS_NETWORK_ETHERNET;
    set_bit(DEVICE_CATEGORY_NETWORK, dc->categories);
    device_class_set_props(dc, virtio_net_properties);
    vpciklass->realize = virtio_net_pci_realize;
}
```

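These class initializers mainly install realize function pointers at different class levels:

- pci_device_class_init() sets DeviceClass::realize.
- virtio_pci_class_init() sets PCIDeviceClass::realize. It also saves the inherited DeviceClass::realize into parent_dc_realize before replacing it.
- virtio_net_pci_class_init() sets VirtioPCIClass::realize.

These hooks run the corresponding logic when the device is realized.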
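The override-and-save pattern can be modeled with plain function pointers. In this hypothetical sketch, a single struct model_class stands in for the DeviceClass, PCIDeviceClass and VirtioPCIClass hooks. The class_init stand-ins are called root-first, as QOM does. The virtio-pci initializer saves the inherited DeviceClass hook into parent_dc_realize, the way device_class_set_parent_realize() does. All model_* names are illustrative only.

```c
#include <assert.h>
#include <string.h>

typedef void (*realize_fn)(char *log);

/* One struct holding the hooks QEMU spreads over three class structs. */
struct model_class {
    realize_fn dc_realize;          /* DeviceClass::realize */
    realize_fn parent_dc_realize;   /* VirtioPCIClass::parent_dc_realize */
    realize_fn pci_realize;         /* PCIDeviceClass::realize */
    realize_fn vpci_realize;        /* VirtioPCIClass::realize */
};

static struct model_class K;

static void model_virtio_net_pci_realize(char *log)
{
    strcat(log, "virtio_net_pci_realize;");
}

static void model_virtio_pci_realize(char *log)
{
    strcat(log, "virtio_pci_realize;");
    if (K.vpci_realize)
        K.vpci_realize(log);          /* subclass hook */
}

static void model_pci_qdev_realize(char *log)
{
    strcat(log, "pci_qdev_realize;");
    if (K.pci_realize)
        K.pci_realize(log);           /* PCIDeviceClass hook */
}

static void model_virtio_pci_dc_realize(char *log)
{
    strcat(log, "virtio_pci_dc_realize;");
    assert(K.parent_dc_realize);
    K.parent_dc_realize(log);         /* chain to the saved parent hook */
}

/* class_init stand-ins, to be called root-first. */
static void model_pci_device_class_init(void)
{
    K.dc_realize = model_pci_qdev_realize;
}

static void model_virtio_pci_class_init(void)
{
    K.pci_realize = model_virtio_pci_realize;
    K.parent_dc_realize = K.dc_realize;           /* save inherited hook */
    K.dc_realize = model_virtio_pci_dc_realize;   /* then override it */
}

static void model_virtio_net_pci_class_init(void)
{
    K.vpci_realize = model_virtio_net_pci_realize;
}
```

Calling K.dc_realize after the three initializers logs virtio_pci_dc_realize, pci_qdev_realize, virtio_pci_realize and virtio_net_pci_realize, in that order. This matches the realize backtrace in the next subsection.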

Object initialization

The virtio device initializes its object structures with virtio_net_pci_instance_init() and device_initfn():

```c
//#0  virtio_net_pci_instance_init (obj=0x5555580bdd70) at ../../qemu/hw/virtio/virtio-net-pci.c:85
//#1  0x0000555555ea793a in object_init_with_type (obj=0x5555580bdd70, ti=0x5555570e88f0) at ../../qemu/qom/object.c:429
//#2  0x0000555555ea791c in object_init_with_type (obj=0x5555580bdd70, ti=0x5555570e8ab0) at ../../qemu/qom/object.c:425
//#3  0x0000555555ea7f00 in object_initialize_with_type (obj=0x5555580bdd70, size=43376, type=0x5555570e8ab0) at ../../qemu/qom/object.c:571
//#4  0x0000555555ea86cf in object_new_with_type (type=0x5555570e8ab0) at ../../qemu/qom/object.c:791
//#5  0x0000555555ea873b in object_new (typename=0x5555580bbfd0 "virtio-net-pci") at ../../qemu/qom/object.c:806
//#6  0x0000555555e9ff6a in qdev_new (name=0x5555580bbfd0 "virtio-net-pci") at ../../qemu/hw/core/qdev.c:166
//#7  0x0000555555bd01c4 in qdev_device_add_from_qdict (opts=0x5555580bc3b0, from_json=false, errp=0x7fffffffd6a0) at ../../qemu/system/qdev-monitor.c:681
//#8  0x0000555555bd03d9 in qdev_device_add (opts=0x5555570f7230, errp=0x55555706a160 <error_fatal>) at ../../qemu/system/qdev-monitor.c:737
//#9  0x0000555555bda4e7 in device_init_func (opaque=0x0, opts=0x5555570f7230, errp=0x55555706a160 <error_fatal>) at ../../qemu/system/vl.c:1200
//#10 0x00005555560c2a63 in qemu_opts_foreach (list=0x555556f53ec0 <qemu_device_opts>, func=0x555555bda4bc <device_init_func>, opaque=0x0, errp=0x55555706a160 <error_fatal>) at ../../qemu/util/qemu-option.c:1135
//#11 0x0000555555bde1b8 in qemu_create_cli_devices () at ../../qemu/system/vl.c:2637
//#12 0x0000555555bde3fe in qmp_x_exit_preconfig (errp=0x55555706a160 <error_fatal>) at ../../qemu/system/vl.c:2706
//#13 0x0000555555be0db6 in qemu_init (argc=39, argv=0x7fffffffdae8) at ../../qemu/system/vl.c:3739
//#14 0x0000555555e9b7ed in main (argc=39, argv=0x7fffffffdae8) at ../../qemu/system/main.c:47
//#15 0x00007ffff7629d90 in __libc_start_call_main (main=main@entry=0x555555e9b7c9 <main>, argc=argc@entry=39, argv=argv@entry=0x7fffffffdae8) at ../sysdeps/nptl/libc_start_call_main.h:58
//#16 0x00007ffff7629e40 in __libc_start_main_impl (main=0x555555e9b7c9 <main>, argc=39, argv=0x7fffffffdae8, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>, stack_end=0x7fffffffdad8) at ../csu/libc-start.c:392
//#17 0x000055555586f0d5 in _start ()
static void virtio_net_pci_instance_init(Object *obj)
{
    VirtIONetPCI *dev = VIRTIO_NET_PCI(obj);

    virtio_instance_init_common(obj, &dev->vdev, sizeof(dev->vdev),
                                TYPE_VIRTIO_NET);
    object_property_add_alias(obj, "bootindex", OBJECT(&dev->vdev),
                              "bootindex");
}

//#0  device_initfn (obj=0x5555580bdd70) at ../../qemu/hw/core/qdev.c:654
//#1  0x0000555555ea793a in object_init_with_type (obj=0x5555580bdd70, ti=0x5555570ec4a0) at ../../qemu/qom/object.c:429
//#2  0x0000555555ea791c in object_init_with_type (obj=0x5555580bdd70, ti=0x5555570a7830) at ../../qemu/qom/object.c:425
//#3  0x0000555555ea791c in object_init_with_type (obj=0x5555580bdd70, ti=0x5555570b5a80) at ../../qemu/qom/object.c:425
//#4  0x0000555555ea791c in object_init_with_type (obj=0x5555580bdd70, ti=0x5555570e88f0) at ../../qemu/qom/object.c:425
//#5  0x0000555555ea791c in object_init_with_type (obj=0x5555580bdd70, ti=0x5555570e8ab0) at ../../qemu/qom/object.c:425
//#6  0x0000555555ea7f00 in object_initialize_with_type (obj=0x5555580bdd70, size=43376, type=0x5555570e8ab0) at ../../qemu/qom/object.c:571
//#7  0x0000555555ea86cf in object_new_with_type (type=0x5555570e8ab0) at ../../qemu/qom/object.c:791
//#8  0x0000555555ea873b in object_new (typename=0x5555580bbfd0 "virtio-net-pci") at ../../qemu/qom/object.c:806
//#9  0x0000555555e9ff6a in qdev_new (name=0x5555580bbfd0 "virtio-net-pci") at ../../qemu/hw/core/qdev.c:166
//#10 0x0000555555bd01c4 in qdev_device_add_from_qdict (opts=0x5555580bc3b0, from_json=false, errp=0x7fffffffd690) at ../../qemu/system/qdev-monitor.c:681
//#11 0x0000555555bd03d9 in qdev_device_add (opts=0x5555570f7230, errp=0x55555706a160 <error_fatal>) at ../../qemu/system/qdev-monitor.c:737
//#12 0x0000555555bda4e7 in device_init_func (opaque=0x0, opts=0x5555570f7230, errp=0x55555706a160 <error_fatal>) at ../../qemu/system/vl.c:1200
//#13 0x00005555560c2a63 in qemu_opts_foreach (list=0x555556f53ec0 <qemu_device_opts>, func=0x555555bda4bc <device_init_func>, opaque=0x0, errp=0x55555706a160 <error_fatal>) at ../../qemu/util/qemu-option.c:1135
//#14 0x0000555555bde1b8 in qemu_create_cli_devices () at ../../qemu/system/vl.c:2637
//#15 0x0000555555bde3fe in qmp_x_exit_preconfig (errp=0x55555706a160 <error_fatal>) at ../../qemu/system/vl.c:2706
//#16 0x0000555555be0db6 in qemu_init (argc=39, argv=0x7fffffffdad8) at ../../qemu/system/vl.c:3739
//#17 0x0000555555e9b7ed in main (argc=39, argv=0x7fffffffdad8) at ../../qemu/system/main.c:47
//#18 0x00007ffff7629d90 in __libc_start_call_main (main=main@entry=0x555555e9b7c9 <main>, argc=argc@entry=39, argv=argv@entry=0x7fffffffdad8) at ../sysdeps/nptl/libc_start_call_main.h:58
//#19 0x00007ffff7629e40 in __libc_start_main_impl (main=0x555555e9b7c9 <main>, argc=39, argv=0x7fffffffdad8, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>, stack_end=0x7fffffffdac8) at ../csu/libc-start.c:392
//#20 0x000055555586f0d5 in _start ()
static void device_initfn(Object *obj)
{
    DeviceState *dev = DEVICE(obj);

    if (phase_check(PHASE_MACHINE_READY)) {
        dev->hotplugged = 1;
        qdev_hot_added = true;
    }

    dev->instance_id_alias = -1;
    dev->realized = false;
    dev->allow_unplug_during_migration = false;

    QLIST_INIT(&dev->gpios);
    QLIST_INIT(&dev->clocks);
}
```

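These functions only initialize the necessary fields. In addition, virtio_net_pci_instance_init() sets up the embedded VirtIONet object (dev->vdev) of type TYPE_VIRTIO_NET and aliases its bootindex property onto the proxy device.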

Realization

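From the class initialization above, the virtio device sets its realize pointers as follows:

- DeviceClass::realize is virtio_pci_dc_realize().
- PCIDeviceClass::realize is virtio_pci_realize().
- VirtioPCIClass::realize is virtio_net_pci_realize().
- The parent_dc_realize field of the virtio-pci class holds pci_qdev_realize().

The backtrace below shows the resulting call chain: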

```c
//#0  virtio_net_pci_realize (vpci_dev=0x5555580a4fa0, errp=0x7fffffffd2f0) at ../../qemu/hw/virtio/virtio-net-pci.c:51
//#1  0x0000555555b749a9 in virtio_pci_realize (pci_dev=0x5555580a4fa0, errp=0x7fffffffd2f0) at ../../qemu/hw/virtio/virtio-pci.c:2407
//#2  0x0000555555a9a921 in pci_qdev_realize (qdev=0x5555580a4fa0, errp=0x7fffffffd3b0) at ../../qemu/hw/pci/pci.c:2093
//#3  0x0000555555b74dc4 in virtio_pci_dc_realize (qdev=0x5555580a4fa0, errp=0x7fffffffd3b0) at ../../qemu/hw/virtio/virtio-pci.c:2501
//#4  0x0000555555e9c4f4 in device_set_realized (obj=0x5555580a4fa0, value=true, errp=0x7fffffffd620) at ../../qemu/hw/core/qdev.c:510
//#5  0x0000555555ea7cfb in property_set_bool (obj=0x5555580a4fa0, v=0x5555580b51a0, name=0x5555562f9dd1 "realized", opaque=0x5555570f4510, errp=0x7fffffffd620) at ../../qemu/qom/object.c:2358
//#6  0x0000555555ea5891 in object_property_set (obj=0x5555580a4fa0, name=0x5555562f9dd1 "realized", v=0x5555580b51a0, errp=0x7fffffffd620) at ../../qemu/qom/object.c:1472
//#7  0x0000555555eaa4ca in object_property_set_qobject (obj=0x5555580a4fa0, name=0x5555562f9dd1 "realized", value=0x5555580b3d60, errp=0x7fffffffd620) at ../../qemu/qom/qom-qobject.c:28
//#8  0x0000555555ea5c4a in object_property_set_bool (obj=0x5555580a4fa0, name=0x5555562f9dd1 "realized", value=true, errp=0x7fffffffd620) at ../../qemu/qom/object.c:1541
//#9  0x0000555555e9bc0e in qdev_realize (dev=0x5555580a4fa0, bus=0x555557415420, errp=0x7fffffffd620) at ../../qemu/hw/core/qdev.c:292
//#10 0x0000555555bcdee9 in qdev_device_add_from_qdict (opts=0x5555580a31b0, from_json=false, errp=0x7fffffffd620) at ../../qemu/system/qdev-monitor.c:718
//#11 0x0000555555bcdf99 in qdev_device_add (opts=0x5555570ef1c0, errp=0x555557061f60 <error_fatal>) at ../../qemu/system/qdev-monitor.c:737
//#12 0x0000555555bd80a7 in device_init_func (opaque=0x0, opts=0x5555570ef1c0, errp=0x555557061f60 <error_fatal>) at ../../qemu/system/vl.c:1200
//#13 0x00005555560be1e2 in qemu_opts_foreach (list=0x555556f4bec0 <qemu_device_opts>, func=0x555555bd807c <device_init_func>, opaque=0x0, errp=0x555557061f60 <error_fatal>) at ../../qemu/util/qemu-option.c:1135
//#14 0x0000555555bdbd46 in qemu_create_cli_devices () at ../../qemu/system/vl.c:2637
//#15 0x0000555555bdbf8c in qmp_x_exit_preconfig (errp=0x555557061f60 <error_fatal>) at ../../qemu/system/vl.c:2706
//#16 0x0000555555bde944 in qemu_init (argc=35, argv=0x7fffffffda68) at ../../qemu/system/vl.c:3739
//#17 0x0000555555e96f93 in main (argc=35, argv=0x7fffffffda68) at ../../qemu/system/main.c:47
//#18 0x00007ffff7829d90 in __libc_start_call_main (main=main@entry=0x555555e96f6f <main>, argc=argc@entry=35, argv=argv@entry=0x7fffffffda68) at ../sysdeps/nptl/libc_start_call_main.h:58
//#19 0x00007ffff7829e40 in __libc_start_main_impl (main=0x555555e96f6f <main>, argc=35, argv=0x7fffffffda68, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>, stack_end=0x7fffffffda58) at ../csu/libc-start.c:392
//#20 0x000055555586cc95 in _start ()
static void virtio_net_pci_realize(VirtIOPCIProxy *vpci_dev, Error **errp)
{
    DeviceState *qdev = DEVICE(vpci_dev);
    VirtIONetPCI *dev = VIRTIO_NET_PCI(vpci_dev);
    DeviceState *vdev = DEVICE(&dev->vdev);
    VirtIONet *net = VIRTIO_NET(vdev);

    if (vpci_dev->nvectors == DEV_NVECTORS_UNSPECIFIED) {
        vpci_dev->nvectors = 2 * MAX(net->nic_conf.peers.queues, 1)
            + 1 /* Config interrupt */
            + 1 /* Control vq */;
    }

    virtio_net_set_netclient_name(&dev->vdev, qdev->id,
                                  object_get_typename(OBJECT(qdev)));
    qdev_realize(vdev, BUS(&vpci_dev->bus), errp);
}

static void virtio_pci_realize(PCIDevice *pci_dev, Error **errp)
{
    VirtIOPCIProxy *proxy = VIRTIO_PCI(pci_dev);
    VirtioPCIClass *k = VIRTIO_PCI_GET_CLASS(pci_dev);
    bool pcie_port = pci_bus_is_express(pci_get_bus(pci_dev)) &&
                     !pci_bus_is_root(pci_get_bus(pci_dev));

    /* fd-based ioevents can't be synchronized in record/replay */
    if (replay_mode != REPLAY_MODE_NONE) {
        proxy->flags &= ~VIRTIO_PCI_FLAG_USE_IOEVENTFD;
    }

    /*
     * virtio pci bar layout used by default.
     * subclasses can re-arrange things if needed.
     *
     *   region 0   --  virtio legacy io bar
     *   region 1   --  msi-x bar
     *   region 2   --  virtio modern io bar (off by default)
     *   region 4+5 --  virtio modern memory (64bit) bar
     *
     */
    proxy->legacy_io_bar_idx  = 0;
    proxy->msix_bar_idx       = 1;
    proxy->modern_io_bar_idx  = 2;
    proxy->modern_mem_bar_idx = 4;

    proxy->common.offset = 0x0;
    proxy->common.size = 0x1000;
    proxy->common.type = VIRTIO_PCI_CAP_COMMON_CFG;

    proxy->isr.offset = 0x1000;
    proxy->isr.size = 0x1000;
    proxy->isr.type = VIRTIO_PCI_CAP_ISR_CFG;

    proxy->device.offset = 0x2000;
    proxy->device.size = 0x1000;
    proxy->device.type = VIRTIO_PCI_CAP_DEVICE_CFG;

    proxy->notify.offset = 0x3000;
    proxy->notify.size = virtio_pci_queue_mem_mult(proxy) * VIRTIO_QUEUE_MAX;
    proxy->notify.type = VIRTIO_PCI_CAP_NOTIFY_CFG;

    proxy->notify_pio.offset = 0x0;
    proxy->notify_pio.size = 0x4;
    proxy->notify_pio.type = VIRTIO_PCI_CAP_NOTIFY_CFG;

    /* subclasses can enforce modern, so do this unconditionally */
    if (!(proxy->flags & VIRTIO_PCI_FLAG_VDPA)) {
        memory_region_init(&proxy->modern_bar, OBJECT(proxy), "virtio-pci",
                           /* PCI BAR regions must be powers of 2 */
                           pow2ceil(proxy->notify.offset + proxy->notify.size));
    } else {
        proxy->lm.offset = proxy->notify.offset + proxy->notify.size;
        proxy->lm.size = 0x20 + VIRTIO_QUEUE_MAX * 4;
        memory_region_init(&proxy->modern_bar, OBJECT(proxy), "virtio-pci",
                           /* PCI BAR regions must be powers of 2 */
                           pow2ceil(proxy->lm.offset + proxy->lm.size));
    }

    if (proxy->disable_legacy == ON_OFF_AUTO_AUTO) {
        proxy->disable_legacy = pcie_port ? ON_OFF_AUTO_ON : ON_OFF_AUTO_OFF;
    }

    if (!virtio_pci_modern(proxy) && !virtio_pci_legacy(proxy)) {
        error_setg(errp, "device cannot work as neither modern nor legacy mode"
                   " is enabled");
        error_append_hint(errp, "Set either disable-modern or disable-legacy"
                          " to off\n");
        return;
    }

    if (pcie_port && pci_is_express(pci_dev)) {
        int pos;
        uint16_t last_pcie_cap_offset = PCI_CONFIG_SPACE_SIZE;

        pos = pcie_endpoint_cap_init(pci_dev, 0);
        assert(pos > 0);

        pos = pci_add_capability(pci_dev, PCI_CAP_ID_PM, 0,
                                 PCI_PM_SIZEOF, errp);
        if (pos < 0) {
            return;
        }

        pci_dev->exp.pm_cap = pos;

        /*
         * Indicates that this function complies with revision 1.2 of the
         * PCI Power Management Interface Specification.
         */
        pci_set_word(pci_dev->config + pos + PCI_PM_PMC, 0x3);

        if (proxy->flags & VIRTIO_PCI_FLAG_AER) {
            pcie_aer_init(pci_dev, PCI_ERR_VER, last_pcie_cap_offset,
                          PCI_ERR_SIZEOF, NULL);
            last_pcie_cap_offset += PCI_ERR_SIZEOF;
        }

        if (proxy->flags & VIRTIO_PCI_FLAG_INIT_DEVERR) {
            /* Init error enabling flags */
            pcie_cap_deverr_init(pci_dev);
        }

        if (proxy->flags & VIRTIO_PCI_FLAG_INIT_LNKCTL) {
            /* Init Link Control Register */
            pcie_cap_lnkctl_init(pci_dev);
        }

        if (proxy->flags & VIRTIO_PCI_FLAG_INIT_PM) {
            /* Init Power Management Control Register */
            pci_set_word(pci_dev->wmask + pos + PCI_PM_CTRL,
                         PCI_PM_CTRL_STATE_MASK);
        }

        if (proxy->flags & VIRTIO_PCI_FLAG_ATS) {
            pcie_ats_init(pci_dev, last_pcie_cap_offset,
                          proxy->flags & VIRTIO_PCI_FLAG_ATS_PAGE_ALIGNED);
            last_pcie_cap_offset += PCI_EXT_CAP_ATS_SIZEOF;
        }

        if (proxy->flags & VIRTIO_PCI_FLAG_INIT_FLR) {
            /* Set Function Level Reset capability bit */
            pcie_cap_flr_init(pci_dev);
        }
    } else {
        /*
         * make future invocations of pci_is_express() return false
         * and pci_config_size() return PCI_CONFIG_SPACE_SIZE.
         */
        pci_dev->cap_present &= ~QEMU_PCI_CAP_EXPRESS;
    }

    virtio_pci_bus_new(&proxy->bus, sizeof(proxy->bus), proxy);
    if (k->realize) {
        k->realize(proxy, errp);
    }
}
```

static void pci_qdev_realize(DeviceState *qdev, Error **errp)

{

PCIDevice *pci_dev = (PCIDevice *)qdev;

PCIDeviceClass *pc = PCI_DEVICE_GET_CLASS(pci_dev);

ObjectClass *klass = OBJECT_CLASS(pc);

Error *local_err = NULL;

bool is_default_rom;

uint16_t class_id;

/*

* capped by systemd (see: udev-builtin-net_id.c)

* as it's the only known user honor it to avoid users

* misconfigure QEMU and then wonder why acpi-index doesn't work

*/

if (pci_dev->acpi_index > ONBOARD_INDEX_MAX) {

error_setg(errp, "acpi-index should be less or equal to %u",

ONBOARD_INDEX_MAX);

return;

}

/*

* make sure that acpi-index is unique across all present PCI devices

*/

if (pci_dev->acpi_index) {

GSequence *used_indexes = pci_acpi_index_list();

if (g_sequence_lookup(used_indexes,

GINT_TO_POINTER(pci_dev->acpi_index),

g_cmp_uint32, NULL)) {

error_setg(errp, "a PCI device with acpi-index = %" PRIu32

" already exist", pci_dev->acpi_index);

return;

}

g_sequence_insert_sorted(used_indexes,

GINT_TO_POINTER(pci_dev->acpi_index),

g_cmp_uint32, NULL);

}

if (pci_dev->romsize != -1 && !is_power_of_2(pci_dev->romsize)) {

error_setg(errp, "ROM size %u is not a power of two", pci_dev->romsize);

return;

}

/* initialize cap_present for pci_is_express() and pci_config_size(),

* Note that hybrid PCIs are not set automatically and need to manage

* QEMU_PCI_CAP_EXPRESS manually */

if (object_class_dynamic_cast(klass, INTERFACE_PCIE_DEVICE) &&

!object_class_dynamic_cast(klass, INTERFACE_CONVENTIONAL_PCI_DEVICE)) {

pci_dev->cap_present |= QEMU_PCI_CAP_EXPRESS;

}

if (object_class_dynamic_cast(klass, INTERFACE_CXL_DEVICE)) {

pci_dev->cap_present |= QEMU_PCIE_CAP_CXL;

}

pci_dev = do_pci_register_device(pci_dev,

object_get_typename(OBJECT(qdev)),

pci_dev->devfn, errp);

if (pci_dev == NULL)

return;

if (pc->realize) {

pc->realize(pci_dev, &local_err);

if (local_err) {

error_propagate(errp, local_err);

do_pci_unregister_device(pci_dev);

return;

}

}

/*

* A PCIe Downstream Port that do not have ARI Forwarding enabled must

* associate only Device 0 with the device attached to the bus

* representing the Link from the Port (PCIe base spec rev 4.0 ver 0.3,

* sec 7.3.1).

* With ARI, PCI_SLOT() can return non-zero value as the traditional

* 5-bit Device Number and 3-bit Function Number fields in its associated

* Routing IDs, Requester IDs and Completer IDs are interpreted as a

* single 8-bit Function Number. Hence, ignore ARI capable devices.

*/

if (pci_is_express(pci_dev) &&

!pcie_find_capability(pci_dev, PCI_EXT_CAP_ID_ARI) &&

pcie_has_upstream_port(pci_dev) &&

PCI_SLOT(pci_dev->devfn)) {

warn_report("PCI: slot %d is not valid for %s,"

" parent device only allows plugging into slot 0.",

PCI_SLOT(pci_dev->devfn), pci_dev->name);

}

if (pci_dev->failover_pair_id) {

if (!pci_bus_is_express(pci_get_bus(pci_dev))) {

error_setg(errp, "failover primary device must be on "

"PCIExpress bus");

pci_qdev_unrealize(DEVICE(pci_dev));

return;

}

class_id = pci_get_word(pci_dev->config + PCI_CLASS_DEVICE);

if (class_id != PCI_CLASS_NETWORK_ETHERNET) {

error_setg(errp, "failover primary device is not an "

"Ethernet device");

pci_qdev_unrealize(DEVICE(pci_dev));

return;

}

if ((pci_dev->cap_present & QEMU_PCI_CAP_MULTIFUNCTION)

|| (PCI_FUNC(pci_dev->devfn) != 0)) {

error_setg(errp, "failover: primary device must be in its own "

"PCI slot");

pci_qdev_unrealize(DEVICE(pci_dev));

return;

}

qdev->allow_unplug_during_migration = true;

}

/* rom loading */

is_default_rom = false;

if (pci_dev->romfile == NULL && pc->romfile != NULL) {

pci_dev->romfile = g_strdup(pc->romfile);

is_default_rom = true;

}

pci_add_option_rom(pci_dev, is_default_rom, &local_err);

if (local_err) {

error_propagate(errp, local_err);

pci_qdev_unrealize(DEVICE(pci_dev));

return;

}

pci_set_power(pci_dev, true);

pci_dev->msi_trigger = pci_msi_trigger;

}

static void virtio_pci_dc_realize(DeviceState *qdev, Error **errp)

{

VirtioPCIClass *vpciklass = VIRTIO_PCI_GET_CLASS(qdev);

VirtIOPCIProxy *proxy = VIRTIO_PCI(qdev);

PCIDevice *pci_dev = &proxy->pci_dev;

if (!(proxy->flags & VIRTIO_PCI_FLAG_DISABLE_PCIE) &&

virtio_pci_modern(proxy)) {

pci_dev->cap_present |= QEMU_PCI_CAP_EXPRESS;

}

vpciklass->parent_dc_realize(qdev, errp);

}

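As can be seen, when the device is instantiated, device_set_realized() sets off a chain of calls through the realize function pointers (and the saved parent_dc_realize pointer) that each subclass overrode during class initialization. Together these calls complete the final instantiation.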
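The override-and-chain-up pattern behind this call chain can be modelled in a few lines of plain C. This is a hypothetical toy, not QOM: `ToyClass`, `toy_set_realized()` and the other `toy_*` names are stand-ins. Real QEMU keeps the saved pointer in the class struct (`parent_dc_realize`), not in a static variable. The trace it builds follows the same order as the realize frames in the virtio_net_device_realize backtrace.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical toy model of QOM-style realize chaining (not QEMU's API). */
typedef void (*RealizeFn)(char *trace, size_t cap);

typedef struct ToyClass {
    RealizeFn realize;                  /* the pointer device_set_realized() calls */
} ToyClass;

static RealizeFn saved_parent_realize;  /* role of parent_dc_realize */
static RealizeFn pci_subclass_realize;  /* role of PCIDeviceClass::realize */

static void append(char *trace, size_t cap, const char *step)
{
    strncat(trace, step, cap - strlen(trace) - 1);
}

/* Stand-in for virtio_pci_realize(): the PCI subclass hook. */
static void toy_virtio_pci_realize(char *trace, size_t cap)
{
    append(trace, cap, "virtio_pci_realize;");
}

/* Stand-in for pci_qdev_realize(): generic PCI work, then the subclass hook. */
static void toy_pci_qdev_realize(char *trace, size_t cap)
{
    append(trace, cap, "pci_qdev_realize;");
    if (pci_subclass_realize) {
        pci_subclass_realize(trace, cap);
    }
}

/* Stand-in for virtio_pci_dc_realize(): its own tweak, then chain up. */
static void toy_virtio_pci_dc_realize(char *trace, size_t cap)
{
    append(trace, cap, "virtio_pci_dc_realize;");
    saved_parent_realize(trace, cap);
}

/* Parent class_init installs the generic PCI realize. */
static void toy_pci_class_init(ToyClass *klass)
{
    klass->realize = toy_pci_qdev_realize;
}

/* Subclass class_init: remember the parent's pointer, then override it. */
static void toy_virtio_pci_class_init(ToyClass *klass)
{
    saved_parent_realize = klass->realize;
    klass->realize = toy_virtio_pci_dc_realize;
    pci_subclass_realize = toy_virtio_pci_realize;
}

/* Stand-in for device_set_realized(): call whatever realize is installed. */
static void toy_set_realized(const ToyClass *klass, char *trace, size_t cap)
{
    trace[0] = '\0';
    klass->realize(trace, cap);
}
```

Running the parent class_init, then the subclass class_init, then `toy_set_realized()` yields the trace `virtio_pci_dc_realize;pci_qdev_realize;virtio_pci_realize;`. That matches frames #11, #10 and #9 of the backtrace. The toy stops at virtio_pci_realize. In QEMU the chain goes on into virtio_net_pci_realize().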

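Specifically, virtio-net-pci is a Virtio Over PCI Bus device, so the VirtIONetPCI object contains a VirtIOPCIProxy object. This is the PCIDevice object that carries the virtio-pci-bus, and it is instantiated in virtio_pci_realize(). That function lays out the VIRTIO_PCI_CAP_COMMON_CFG, VIRTIO_PCI_CAP_ISR_CFG and VIRTIO_PCI_CAP_DEVICE_CFG configuration regions, plus VIRTIO_PCI_CAP_NOTIFY_CFG for both the memory-mapped notify region and the port-I/O notify_pio region. It then calls virtio_pci_bus_new() to create the virtio-pci-bus owned by the VirtIOPCIProxy. Negotiation with the guest driver has not happened yet at this point, so the device is not yet usable. To negotiate and communicate with the guest driver, the virtio device itself must also be instantiated. virtio_net_pci_realize() does this by realizing the VirtIONet object. Its TypeInfo is virtio_net_info, shown below together with its parent type virtio_device_info: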

static const TypeInfo virtio_net_info = {

.name = TYPE_VIRTIO_NET,

.parent = TYPE_VIRTIO_DEVICE,

.instance_size = sizeof(VirtIONet),

.instance_init = virtio_net_instance_init,

.class_init = virtio_net_class_init,

};

static const TypeInfo virtio_device_info = {

.name = TYPE_VIRTIO_DEVICE,

.parent = TYPE_DEVICE,

.instance_size = sizeof(VirtIODevice),

.class_init = virtio_device_class_init,

.instance_finalize = virtio_device_instance_finalize,

.abstract = true,

.class_size = sizeof(VirtioDeviceClass),

};

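Their class_init functions install the realize callbacks. virtio_device_class_init() sets the DeviceClass realize pointer to virtio_device_realize(), and virtio_net_class_init() sets the VirtioDeviceClass realize pointer to virtio_net_device_realize(). The resulting call chain and both functions are shown below: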

//#0 virtio_net_device_realize (dev=0x5555580c6260, errp=0x7fffffffd030) at ../../qemu/hw/net/virtio-net.c:3657

//#1 0x0000555555df39c2 in virtio_device_realize (dev=0x5555580c6260, errp=0x7fffffffd090) at ../../qemu/hw/virtio/virtio.c:3748

//#2 0x0000555555ea0d4e in device_set_realized (obj=0x5555580c6260, value=true, errp=0x7fffffffd360) at ../../qemu/hw/core/qdev.c:510

//#3 0x0000555555eac555 in property_set_bool (obj=0x5555580c6260, v=0x5555580d4190, name=0x555556300c31 "realized", opaque=0x5555570fc6e0, errp=0x7fffffffd360) at ../../qemu/qom/object.c:2358

//#4 0x0000555555eaa0eb in object_property_set (obj=0x5555580c6260, name=0x555556300c31 "realized", v=0x5555580d4190, errp=0x7fffffffd360) at ../../qemu/qom/object.c:1472

//#5 0x0000555555eaed24 in object_property_set_qobject (obj=0x5555580c6260, name=0x555556300c31 "realized", value=0x5555580d40d0, errp=0x7fffffffd360) at ../../qemu/qom/qom-qobject.c:28

//#6 0x0000555555eaa4a4 in object_property_set_bool (obj=0x5555580c6260, name=0x555556300c31 "realized", value=true, errp=0x7fffffffd360) at ../../qemu/qom/object.c:1541

//#7 0x0000555555ea0468 in qdev_realize (dev=0x5555580c6260, bus=0x5555580c61e0, errp=0x7fffffffd360) at ../../qemu/hw/core/qdev.c:292

//#8 0x0000555555e141a8 in virtio_net_pci_realize (vpci_dev=0x5555580bdd70, errp=0x7fffffffd360) at ../../qemu/hw/virtio/virtio-net-pci.c:64

//#9 0x0000555555b76de9 in virtio_pci_realize (pci_dev=0x5555580bdd70, errp=0x7fffffffd360) at ../../qemu/hw/virtio/virtio-pci.c:2407

//#10 0x0000555555a9cd61 in pci_qdev_realize (qdev=0x5555580bdd70, errp=0x7fffffffd420) at ../../qemu/hw/pci/pci.c:2093

//#11 0x0000555555b77204 in virtio_pci_dc_realize (qdev=0x5555580bdd70, errp=0x7fffffffd420) at ../../qemu/hw/virtio/virtio-pci.c:2501

//#12 0x0000555555ea0d4e in device_set_realized (obj=0x5555580bdd70, value=true, errp=0x7fffffffd690) at ../../qemu/hw/core/qdev.c:510

//#13 0x0000555555eac555 in property_set_bool (obj=0x5555580bdd70, v=0x5555580cdea0, name=0x555556300c31 "realized", opaque=0x5555570fc6e0, errp=0x7fffffffd690) at ../../qemu/qom/object.c:2358

//#14 0x0000555555eaa0eb in object_property_set (obj=0x5555580bdd70, name=0x555556300c31 "realized", v=0x5555580cdea0, errp=0x7fffffffd690) at ../../qemu/qom/object.c:1472

//#15 0x0000555555eaed24 in object_property_set_qobject (obj=0x5555580bdd70, name=0x555556300c31 "realized", value=0x5555580cca90, errp=0x7fffffffd690) at ../../qemu/qom/qom-qobject.c:28

//#16 0x0000555555eaa4a4 in object_property_set_bool (obj=0x5555580bdd70, name=0x555556300c31 "realized", value=true, errp=0x7fffffffd690) at ../../qemu/qom/object.c:1541

//#17 0x0000555555ea0468 in qdev_realize (dev=0x5555580bdd70, bus=0x555557430730, errp=0x7fffffffd690) at ../../qemu/hw/core/qdev.c:292

//#18 0x0000555555bd0329 in qdev_device_add_from_qdict (opts=0x5555580bc3b0, from_json=false, errp=0x7fffffffd690) at ../../qemu/system/qdev-monitor.c:718

//#19 0x0000555555bd03d9 in qdev_device_add (opts=0x5555570f7230, errp=0x55555706a160 <error_fatal>) at ../../qemu/system/qdev-monitor.c:737

//#20 0x0000555555bda4e7 in device_init_func (opaque=0x0, opts=0x5555570f7230, errp=0x55555706a160 <error_fatal>) at ../../qemu/system/vl.c:1200

//#21 0x00005555560c2a63 in qemu_opts_foreach (list=0x555556f53ec0 <qemu_device_opts>, func=0x555555bda4bc <device_init_func>, opaque=0x0, errp=0x55555706a160 <error_fatal>) at ../../qemu/util/qemu-option.c:1135

//#22 0x0000555555bde1b8 in qemu_create_cli_devices () at ../../qemu/system/vl.c:2637

//#23 0x0000555555bde3fe in qmp_x_exit_preconfig (errp=0x55555706a160 <error_fatal>) at ../../qemu/system/vl.c:2706

//#24 0x0000555555be0db6 in qemu_init (argc=39, argv=0x7fffffffdad8) at ../../qemu/system/vl.c:3739

//#25 0x0000555555e9b7ed in main (argc=39, argv=0x7fffffffdad8) at ../../qemu/system/main.c:47

//#26 0x00007ffff7629d90 in __libc_start_call_main (main=main@entry=0x555555e9b7c9 <main>, argc=argc@entry=39, argv=argv@entry=0x7fffffffdad8) at ../sysdeps/nptl/libc_start_call_main.h:58

//#27 0x00007ffff7629e40 in __libc_start_main_impl (main=0x555555e9b7c9 <main>, argc=39, argv=0x7fffffffdad8, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>, stack_end=0x7fffffffdac8) at ../csu/libc-start.c:392

//#28 0x000055555586f0d5 in _start ()

static void virtio_net_device_realize(DeviceState *dev, Error **errp)

{

VirtIODevice *vdev = VIRTIO_DEVICE(dev);

VirtIONet *n = VIRTIO_NET(dev);

NetClientState *nc;

int i;

if (n->net_conf.mtu) {

n->host_features |= (1ULL << VIRTIO_NET_F_MTU);

}

if (n->net_conf.duplex_str) {

if (strncmp(n->net_conf.duplex_str, "half", 5) == 0) {

n->net_conf.duplex = DUPLEX_HALF;

} else if (strncmp(n->net_conf.duplex_str, "full", 5) == 0) {

n->net_conf.duplex = DUPLEX_FULL;

} else {

error_setg(errp, "'duplex' must be 'half' or 'full'");

return;

}

n->host_features |= (1ULL << VIRTIO_NET_F_SPEED_DUPLEX);

} else {

n->net_conf.duplex = DUPLEX_UNKNOWN;

}

if (n->net_conf.speed < SPEED_UNKNOWN) {

error_setg(errp, "'speed' must be between 0 and INT_MAX");

return;

}

if (n->net_conf.speed >= 0) {

n->host_features |= (1ULL << VIRTIO_NET_F_SPEED_DUPLEX);

}

if (n->failover) {

n->primary_listener.hide_device = failover_hide_primary_device;

qatomic_set(&n->failover_primary_hidden, true);

device_listener_register(&n->primary_listener);

migration_add_notifier(&n->migration_state,

virtio_net_migration_state_notifier);

n->host_features |= (1ULL << VIRTIO_NET_F_STANDBY);

}

virtio_net_set_config_size(n, n->host_features);

virtio_init(vdev, VIRTIO_ID_NET, n->config_size);

/*

* We set a lower limit on RX queue size to what it always was.

* Guests that want a smaller ring can always resize it without

* help from us (using virtio 1 and up).

*/

if (n->net_conf.rx_queue_size < VIRTIO_NET_RX_QUEUE_MIN_SIZE ||

n->net_conf.rx_queue_size > VIRTQUEUE_MAX_SIZE ||

!is_power_of_2(n->net_conf.rx_queue_size)) {

error_setg(errp, "Invalid rx_queue_size (= %" PRIu16 "), "

"must be a power of 2 between %d and %d.",

n->net_conf.rx_queue_size, VIRTIO_NET_RX_QUEUE_MIN_SIZE,

VIRTQUEUE_MAX_SIZE);

virtio_cleanup(vdev);

return;

}

if (n->net_conf.tx_queue_size < VIRTIO_NET_TX_QUEUE_MIN_SIZE ||

n->net_conf.tx_queue_size > virtio_net_max_tx_queue_size(n) ||

!is_power_of_2(n->net_conf.tx_queue_size)) {

error_setg(errp, "Invalid tx_queue_size (= %" PRIu16 "), "

"must be a power of 2 between %d and %d",

n->net_conf.tx_queue_size, VIRTIO_NET_TX_QUEUE_MIN_SIZE,

virtio_net_max_tx_queue_size(n));

virtio_cleanup(vdev);

return;

}

n->max_ncs = MAX(n->nic_conf.peers.queues, 1);

/*

* Figure out the datapath queue pairs since the backend could

* provide control queue via peers as well.

*/

if (n->nic_conf.peers.queues) {

for (i = 0; i < n->max_ncs; i++) {

if (n->nic_conf.peers.ncs[i]->is_datapath) {

++n->max_queue_pairs;

}

}

}

n->max_queue_pairs = MAX(n->max_queue_pairs, 1);

if (n->max_queue_pairs * 2 + 1 > VIRTIO_QUEUE_MAX) {

error_setg(errp, "Invalid number of queue pairs (= %" PRIu32 "), "

"must be a positive integer less than %d.",

n->max_queue_pairs, (VIRTIO_QUEUE_MAX - 1) / 2);

virtio_cleanup(vdev);

return;

}

n->vqs = g_new0(VirtIONetQueue, n->max_queue_pairs);

n->curr_queue_pairs = 1;

n->tx_timeout = n->net_conf.txtimer;

if (n->net_conf.tx && strcmp(n->net_conf.tx, "timer")

&& strcmp(n->net_conf.tx, "bh")) {

warn_report("virtio-net: "

"Unknown option tx=%s, valid options: \"timer\" \"bh\"",

n->net_conf.tx);

error_printf("Defaulting to \"bh\"");

}

n->net_conf.tx_queue_size = MIN(virtio_net_max_tx_queue_size(n),

n->net_conf.tx_queue_size);

for (i = 0; i < n->max_queue_pairs; i++) {

virtio_net_add_queue(n, i);

}

n->ctrl_vq = virtio_add_queue(vdev, 64, virtio_net_handle_ctrl);

qemu_macaddr_default_if_unset(&n->nic_conf.macaddr);

memcpy(&n->mac[0], &n->nic_conf.macaddr, sizeof(n->mac));

n->status = VIRTIO_NET_S_LINK_UP;

qemu_announce_timer_reset(&n->announce_timer, migrate_announce_params(),

QEMU_CLOCK_VIRTUAL,

virtio_net_announce_timer, n);

n->announce_timer.round = 0;

if (n->netclient_type) {

/*

* Happen when virtio_net_set_netclient_name has been called.

*/

n->nic = qemu_new_nic(&net_virtio_info, &n->nic_conf,

n->netclient_type, n->netclient_name,

&dev->mem_reentrancy_guard, n);

} else {

n->nic = qemu_new_nic(&net_virtio_info, &n->nic_conf,

object_get_typename(OBJECT(dev)), dev->id,

&dev->mem_reentrancy_guard, n);

}

for (i = 0; i < n->max_queue_pairs; i++) {

n->nic->ncs[i].do_not_pad = true;

}

peer_test_vnet_hdr(n);

if (peer_has_vnet_hdr(n)) {

for (i = 0; i < n->max_queue_pairs; i++) {

qemu_using_vnet_hdr(qemu_get_subqueue(n->nic, i)->peer, true);

}

n->host_hdr_len = sizeof(struct virtio_net_hdr);

} else {

n->host_hdr_len = 0;

}

qemu_format_nic_info_str(qemu_get_queue(n->nic), n->nic_conf.macaddr.a);

n->vqs[0].tx_waiting = 0;

n->tx_burst = n->net_conf.txburst;

virtio_net_set_mrg_rx_bufs(n, 0, 0, 0);

n->promisc = 1; /* for compatibility */

n->mac_table.macs = g_malloc0(MAC_TABLE_ENTRIES * ETH_ALEN);

n->vlans = g_malloc0(MAX_VLAN >> 3);

nc = qemu_get_queue(n->nic);

nc->rxfilter_notify_enabled = 1;

if (nc->peer && nc->peer->info->type == NET_CLIENT_DRIVER_VHOST_VDPA) {

struct virtio_net_config netcfg = {};

memcpy(&netcfg.mac, &n->nic_conf.macaddr, ETH_ALEN);

vhost_net_set_config(get_vhost_net(nc->peer),

(uint8_t *)&netcfg, 0, ETH_ALEN, VHOST_SET_CONFIG_TYPE_FRONTEND);

}

QTAILQ_INIT(&n->rsc_chains);

n->qdev = dev;

net_rx_pkt_init(&n->rx_pkt);

if (virtio_has_feature(n->host_features, VIRTIO_NET_F_RSS)) {

virtio_net_load_ebpf(n, errp);

}

}

static void virtio_device_realize(DeviceState *dev, Error **errp)

{

VirtIODevice *vdev = VIRTIO_DEVICE(dev);

VirtioDeviceClass *vdc = VIRTIO_DEVICE_GET_CLASS(dev);

Error *err = NULL;

/* Devices should either use vmsd or the load/save methods */

assert(!vdc->vmsd || !vdc->load);

if (vdc->realize != NULL) {

vdc->realize(dev, &err);

if (err != NULL) {

error_propagate(errp, err);

return;

}

}

virtio_bus_device_plugged(vdev, &err);

if (err != NULL) {

error_propagate(errp, err);

vdc->unrealize(dev);

return;

}

vdev->listener.commit = virtio_memory_listener_commit;

vdev->listener.name = "virtio";

memory_listener_register(&vdev->listener, vdev->dma_as);

}

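As shown, this instantiates the concrete components of the virtio device, such as the virtqueues and feature bits. As with any QEMU PCI device, the PCI configuration space must also be set up. This happens in virtio_bus_device_plugged(), which virtio_device_realize() calls right after virtio_net_device_realize() returns. That function invokes the device_plugged callback of the bus the virtio device sits on. Earlier, virtio_pci_realize() created a virtio-pci-bus owned by the VirtIOPCIProxy object, and virtio_net_pci_realize() placed the virtio device (the VirtIONet object) on that bus. During class initialization, the virtio-pci-bus class sets its device_plugged pointer to virtio_pci_device_plugged():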

//#0 virtio_pci_device_plugged (d=0x5555580bdd70, errp=0x7fffffffcfd8) at ../../qemu/hw/virtio/virtio-pci.c:2090

As can be seen, the PCI configuration space is set up in virtio_pci_device_plugged().

Overall, instantiating a virtio device instantiates the virtio transport and the virtio device separately. This separation gives better extensibility.
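Below is a hypothetical sketch of this transport/device split. The generic realize runs the device-specific part first. It then hands the device to whatever transport owns its bus, through a device_plugged callback. The types and names (`ToyVirtioDev`, `ToyBus`, `toy_pci_plugged`) are illustrative only. The queue count uses the `max_queue_pairs * 2 + 1` formula from virtio_net_device_realize(). The four regions are the common/ISR/device/notify set mapped in virtio_pci_device_plugged().

```c
/* Hypothetical model of the transport/device split; not QEMU's types. */
typedef struct ToyBus ToyBus;

typedef struct {
    ToyBus *bus;        /* set when the device is placed on the bus */
    int num_queues;     /* filled in by the device-specific realize */
} ToyVirtioDev;

struct ToyBus {
    /* transport hook, in the role of virtio_pci_device_plugged() */
    int (*device_plugged)(ToyBus *bus, ToyVirtioDev *vdev);
    int regions_exposed;
};

/* Device part, like virtio_net_device_realize(): rx/tx per pair + ctrl vq. */
static void toy_net_realize(ToyVirtioDev *vdev, int queue_pairs)
{
    vdev->num_queues = queue_pairs * 2 + 1;
}

/* Generic part, like virtio_device_realize(): device first, then transport. */
static int toy_device_realize(ToyVirtioDev *vdev, int queue_pairs)
{
    toy_net_realize(vdev, queue_pairs);
    return vdev->bus->device_plugged(vdev->bus, vdev);
}

/* One transport: publishes common, ISR, device and notify regions. */
static int toy_pci_plugged(ToyBus *bus, ToyVirtioDev *vdev)
{
    if (vdev->num_queues <= 0) {
        return -1;
    }
    bus->regions_exposed = 4;
    return 0;
}
```

Swapping `toy_pci_plugged` for another transport callback would leave `toy_net_realize()` untouched. That is the extensibility the split buys.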

virtio setup

After QEMU has instantiated the virtio-net-pci device, it must work with the guest driver to complete the virtio setup, i.e. to configure each virtio component.

Configuration space of the virtio structures

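As explained in the earlier virtio transport section, the capabilities in the PCI configuration space of the virtio-net-pci device describe where the configuration region of each virtio component lives.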

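So the first step is to set up the configuration space of the virtio structures, i.e. to use the capabilities to locate the BAR that holds each component's configuration region.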
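To make "locate the BAR from the capabilities" concrete, here is a hypothetical guest-side sketch. It walks the capability list of a 256-byte configuration-space image and returns the bar/offset/length of a virtio vendor capability. The register offsets follow the PCI and virtio specifications as we understand them: status at 0x06, capability pointer at 0x34, vendor capability ID 0x09, and the `struct virtio_pci_cap` field offsets. The function, enum and macro names are our own.

```c
#include <stdint.h>

#define PCI_STATUS          0x06  /* status register (16-bit)          */
#define PCI_STATUS_CAP_LIST 0x10  /* "capabilities list present" bit   */
#define PCI_CAPABILITY_LIST 0x34  /* pointer to the first capability   */
#define PCI_CAP_ID_VNDR     0x09  /* vendor-specific capability ID     */

/* cfg_type values from the virtio spec (VIRTIO_PCI_CAP_*_CFG). */
enum { CAP_COMMON = 1, CAP_NOTIFY = 2, CAP_ISR = 3, CAP_DEVICE = 4 };

/* Byte offsets inside struct virtio_pci_cap. */
enum { CAP_OFF_TYPE = 3, CAP_OFF_BAR = 4, CAP_OFF_OFFSET = 8, CAP_OFF_LENGTH = 12 };

static uint32_t rd_le32(const uint8_t *cfg, unsigned off)
{
    return (uint32_t)cfg[off] | (uint32_t)cfg[off + 1] << 8 |
           (uint32_t)cfg[off + 2] << 16 | (uint32_t)cfg[off + 3] << 24;
}

/* Walk the capability list and report where the structure with the given
 * cfg_type lives: which BAR, at what offset, and how long. */
static int find_virtio_cap(const uint8_t cfg[256], uint8_t cfg_type,
                           uint8_t *bar, uint32_t *offset, uint32_t *length)
{
    if (!(cfg[PCI_STATUS] & PCI_STATUS_CAP_LIST)) {
        return -1;
    }
    unsigned pos = cfg[PCI_CAPABILITY_LIST] & ~3u;
    for (int hops = 0; pos != 0 && hops < 48; hops++) {  /* bound the walk */
        if (cfg[pos] == PCI_CAP_ID_VNDR && pos + 16 <= 256 &&
            cfg[pos + CAP_OFF_TYPE] == cfg_type) {
            *bar = cfg[pos + CAP_OFF_BAR];
            *offset = rd_le32(cfg, pos + CAP_OFF_OFFSET);
            *length = rd_le32(cfg, pos + CAP_OFF_LENGTH);
            return 0;
        }
        pos = cfg[pos + 1] & ~3u;                         /* cap_next */
    }
    return -1;
}
```

For a device laid out like the one in this article, looking up `CAP_COMMON` would report BAR 4, offset 0 and length 0x1000. Looking up `CAP_ISR` would report BAR 4, offset 0x1000.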

During the virtio device instantiation described above, virtio_pci_device_plugged() places all of the capability regions inside proxy->modern_bar:

/* This is called by virtio-bus just after the device is plugged. */

static void virtio_pci_device_plugged(DeviceState *d, Error **errp)

{

...

struct virtio_pci_cap cap = {

.cap_len = sizeof cap,

};

struct virtio_pci_notify_cap notify = {

.cap.cap_len = sizeof notify,

.notify_off_multiplier =

cpu_to_le32(virtio_pci_queue_mem_mult(proxy)),

};

struct virtio_pci_cfg_cap cfg = {

.cap.cap_len = sizeof cfg,

.cap.cfg_type = VIRTIO_PCI_CAP_PCI_CFG,

};

struct virtio_pci_notify_cap notify_pio = {

.cap.cap_len = sizeof notify,

.notify_off_multiplier = cpu_to_le32(0x0),

};

struct virtio_pci_cfg_cap *cfg_mask;

...

virtio_pci_modern_mem_region_map(proxy, &proxy->common, &cap);

virtio_pci_modern_mem_region_map(proxy, &proxy->isr, &cap);

virtio_pci_modern_mem_region_map(proxy, &proxy->device, &cap);

virtio_pci_modern_mem_region_map(proxy, &proxy->notify, &notify.cap);

...

pci_register_bar(&proxy->pci_dev, proxy->modern_mem_bar_idx,

PCI_BASE_ADDRESS_SPACE_MEMORY |

PCI_BASE_ADDRESS_MEM_PREFETCH |

PCI_BASE_ADDRESS_MEM_TYPE_64,

&proxy->modern_bar);

}

static void virtio_pci_modern_mem_region_map(VirtIOPCIProxy *proxy,

VirtIOPCIRegion *region,

struct virtio_pci_cap *cap)

{

virtio_pci_modern_region_map(proxy, region, cap,

&proxy->modern_bar, proxy->modern_mem_bar_idx);

}

static void virtio_pci_modern_region_map(VirtIOPCIProxy *proxy,

VirtIOPCIRegion *region,

struct virtio_pci_cap *cap,

MemoryRegion *mr,

uint8_t bar)

{

memory_region_add_subregion(mr, region->offset, &region->mr);

cap->cfg_type = region->type;

cap->bar = bar;

cap->offset = cpu_to_le32(region->offset);

cap->length = cpu_to_le32(region->size);

virtio_pci_add_mem_cap(proxy, cap);

}

virtio_pci_realize() sets proxy->modern_mem_bar_idx to 4, so the capability regions are exposed through BAR4. This is a 64-bit BAR that also occupies BAR5:

static void virtio_pci_realize(PCIDevice *pci_dev, Error **errp)

{

...

/*

* virtio pci bar layout used by default.

* subclasses can re-arrange things if needed.

*

* region 0 -- virtio legacy io bar

* region 1 -- msi-x bar

* region 2 -- virtio modern io bar (off by default)

* region 4+5 -- virtio modern memory (64bit) bar

*

*/

proxy->modern_mem_bar_idx = 4;

...

}
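The size of modern_bar follows from the offsets set in virtio_pci_realize(). The notify region starts at 0x3000, and the whole BAR is rounded up with pow2ceil(). The sketch below reproduces that arithmetic for the non-vDPA branch. Two values are assumptions not shown in this excerpt. First, VIRTIO_QUEUE_MAX = 1024. Second, virtio_pci_queue_mem_mult() returns 4 by default, or 0x1000 when the page-per-vq flag is set.

```c
#include <stdint.h>

/* Assumptions, not shown in this excerpt: QEMU's VIRTIO_QUEUE_MAX is 1024,
 * and virtio_pci_queue_mem_mult() returns 4, or 0x1000 with page-per-vq. */
#define ASSUMED_VIRTIO_QUEUE_MAX 1024u

/* Smallest power of two >= v, playing the role of pow2ceil(). */
static uint64_t toy_pow2ceil(uint64_t v)
{
    uint64_t p = 1;
    while (p < v) {
        p <<= 1;
    }
    return p;
}

/* modern_bar size for the non-vDPA branch of virtio_pci_realize(). */
static uint64_t toy_modern_bar_size(int page_per_vq)
{
    const uint64_t notify_offset = 0x3000;            /* proxy->notify.offset */
    const uint64_t mult = page_per_vq ? 0x1000 : 4;   /* assumed mem mult */
    const uint64_t notify_size = mult * ASSUMED_VIRTIO_QUEUE_MAX;
    return toy_pow2ceil(notify_offset + notify_size);
}
```

Under those assumptions, the default BAR4 is 0x4000 bytes (16 KiB), and page-per-vq grows it to 0x800000 bytes (8 MiB).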

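Following the usual QEMU PCI device flow, the guest driver writes a physical address into BAR4 in the PCI configuration space. pci_default_write_config() then calls pci_update_mappings() to remap the BAR, which completes the setup of the virtio structures' configuration space: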

//#0 pci_default_write_config (d=0x5555580bdd70, addr=32, val_in=4294967295, l=4) at ../../qemu/hw/pci/pci.c:1594

//#1 0x0000555555b729ad in virtio_write_config (pci_dev=0x5555580bdd70, address=32, val=4294967295, len=4) at ../../qemu/hw/virtio/virtio-pci.c:747

//#2 0x0000555555aa0c9a in pci_host_config_write_common (pci_dev=0x5555580bdd70, addr=32, limit=256, val=4294967295, len=4) at ../../qemu/hw/pci/pci_host.c:96

//#3 0x0000555555aa0ee6 in pci_data_write (s=0x555557430730, addr=2147489824, val=4294967295, len=4) at ../../qemu/hw/pci/pci_host.c:138

//#4 0x0000555555aa10bb in pci_host_data_write (opaque=0x5555573f9ad0, addr=0, val=4294967295, len=4) at ../../qemu/hw/pci/pci_host.c:188

//#5 0x0000555555e1e25a in memory_region_write_accessor (mr=0x5555573f9f10, addr=0, value=0x7ffff65ff598, size=4, shift=0, mask=4294967295, attrs=...) at ../../qemu/system/memory.c:497

//#6 0x0000555555e1e593 in access_with_adjusted_size (addr=0, value=0x7ffff65ff598, size=4, access_size_min=1, access_size_max=4, access_fn=0x555555e1e160 <memory_region_write_accessor>, mr=0x5555573f9f10, attrs=...) at ../../qemu/system/memory.c:573

//#7 0x0000555555e218ad in memory_region_dispatch_write (mr=0x5555573f9f10, addr=0, data=4294967295, op=MO_32, attrs=...) at ../../qemu/system/memory.c:1521

//#8 0x0000555555e2fffa in flatview_write_continue_step (attrs=..., buf=0x7ffff7f8a000 "\377\377\377\377", len=4, mr_addr=0, l=0x7ffff65ff680, mr=0x5555573f9f10) at ../../qemu/system/physmem.c:2713

//#9 0x0000555555e300ca in flatview_write_continue (fv=0x7ffee8043b90, addr=3324, attrs=..., ptr=0x7ffff7f8a000, len=4, mr_addr=0, l=4, mr=0x5555573f9f10) at ../../qemu/system/physmem.c:2743

//#10 0x0000555555e301dc in flatview_write (fv=0x7ffee8043b90, addr=3324, attrs=..., buf=0x7ffff7f8a000, len=4) at ../../qemu/system/physmem.c:2774

//#11 0x0000555555e3062a in address_space_write (as=0x555557055e80 <address_space_io>, addr=3324, attrs=..., buf=0x7ffff7f8a000, len=4) at ../../qemu/system/physmem.c:2894

//#12 0x0000555555e306a6 in address_space_rw (as=0x555557055e80 <address_space_io>, addr=3324, attrs=..., buf=0x7ffff7f8a000, len=4, is_write=true) at ../../qemu/system/physmem.c:2904

//#13 0x0000555555e89cd0 in kvm_handle_io (port=3324, attrs=..., data=0x7ffff7f8a000, direction=1, size=4, count=1) at ../../qemu/accel/kvm/kvm-all.c:2631

//#14 0x0000555555e8a640 in kvm_cpu_exec (cpu=0x5555573bc6a0) at ../../qemu/accel/kvm/kvm-all.c:2903

//#15 0x0000555555e8d712 in kvm_vcpu_thread_fn (arg=0x5555573bc6a0) at ../../qemu/accel/kvm/kvm-accel-ops.c:50

//#16 0x00005555560b6f08 in qemu_thread_start (args=0x5555573c5850) at ../../qemu/util/qemu-thread-posix.c:541

//#17 0x00007ffff7694ac3 in start_thread (arg=<optimized out>) at ./nptl/pthread_create.c:442

//#18 0x00007ffff7726850 in clone3 () at ../sysdeps/unix/sysv/linux/x86_64/clone3.S:81

void pci_default_write_config(PCIDevice *d, uint32_t addr, uint32_t val_in, int l)

{

...

if (ranges_overlap(addr, l, PCI_BASE_ADDRESS_0, 24) ||

ranges_overlap(addr, l, PCI_ROM_ADDRESS, 4) ||

ranges_overlap(addr, l, PCI_ROM_ADDRESS1, 4) ||

range_covers_byte(addr, l, PCI_COMMAND))

pci_update_mappings(d);

...

}
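In the backtrace above, the guest writes 4294967295 (0xffffffff) to config offset 32. That is 0x20, i.e. BAR4. This looks like the standard PCI BAR sizing probe: write all ones, read back, and decode the size from the bits the device kept writable. Below is a hypothetical model of a 64-bit prefetchable memory BAR pair (BAR4/BAR5) with the guest-side decode. The `Toy*` names are illustrative, and the flag bits are hard-coded as read-only.

```c
#include <stdint.h>

/* Low flag bits of a 64-bit prefetchable memory BAR: bit 3 = prefetchable,
 * bits 2:1 = 0b10 (64-bit), bit 0 = 0 (memory). Read-only in this model. */
#define TOY_BAR_FLAGS 0xCu

/* Hypothetical BAR4/BAR5 pair; size must be a power of two. */
typedef struct {
    uint64_t size;
    uint64_t addr;
} ToyBar64;

/* Device side: store only the writable address bits, ~(size - 1). */
static void toy_bar_write(ToyBar64 *bar, int high_half, uint32_t val)
{
    uint64_t cur = bar->addr;
    if (high_half) {
        cur = (cur & 0xFFFFFFFFull) | ((uint64_t)val << 32);
    } else {
        cur = (cur & ~0xFFFFFFFFull) | val;
    }
    bar->addr = cur & ~(bar->size - 1);
}

static uint32_t toy_bar_read(const ToyBar64 *bar, int high_half)
{
    if (high_half) {
        return (uint32_t)(bar->addr >> 32);
    }
    return (uint32_t)bar->addr | TOY_BAR_FLAGS;
}

/* Guest side: write all ones to both halves, read back, decode the size. */
static uint64_t toy_probe_bar_size(ToyBar64 *bar)
{
    toy_bar_write(bar, 0, 0xFFFFFFFFu);
    toy_bar_write(bar, 1, 0xFFFFFFFFu);
    uint64_t lo = toy_bar_read(bar, 0) & ~0xFull;   /* drop flag bits */
    uint64_t hi = toy_bar_read(bar, 1);
    return ~((hi << 32) | lo) + 1;
}
```

For a 0x4000-byte BAR, the low half reads back as 0xffffc00c after the probe, and the decoded size is 0x4000. The guest then writes the real base address, which the `addr & ~(size - 1)` masking keeps intact as long as it is size-aligned.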

virtio components

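As described in the virtio transport section, each virtio component is configured through its corresponding configuration region. The previous subsection, on the configuration space of the virtio structures, showed how those regions are initialized and mapped into the AddressSpace. From this point, the guest can configure a component simply by reading and writing its configuration region.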

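Specifically, during instantiation virtio_pci_device_plugged() creates a separate MemoryRegion for each virtio structure's configuration region. A guest read or write to a component's region therefore invokes the callbacks of the corresponding MemoryRegion, and those callbacks perform the component setup: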

/* This is called by virtio-bus just after the device is plugged. */

static void virtio_pci_device_plugged(DeviceState *d, Error **errp)

{

...

virtio_pci_modern_regions_init(proxy, vdev->name);

...

}

static void virtio_pci_modern_regions_init(VirtIOPCIProxy *proxy,

const char *vdev_name)

{

static const MemoryRegionOps common_ops = {

.read = virtio_pci_common_read,

.write = virtio_pci_common_write,

.impl = {

.min_access_size = 1,

.max_access_size = 4,

},

.endianness = DEVICE_LITTLE_ENDIAN,

};

static const MemoryRegionOps isr_ops = {

.read = virtio_pci_isr_read,

.write = virtio_pci_isr_write,

.impl = {

.min_access_size = 1,

.max_access_size = 4,

},

.endianness = DEVICE_LITTLE_ENDIAN,

};

static const MemoryRegionOps device_ops = {

.read = virtio_pci_device_read,

.write = virtio_pci_device_write,

.impl = {

.min_access_size = 1,

.max_access_size = 4,

},

.endianness = DEVICE_LITTLE_ENDIAN,

};

static const MemoryRegionOps notify_ops = {

.read = virtio_pci_notify_read,

.write = virtio_pci_notify_write,

.impl = {

.min_access_size = 1,

.max_access_size = 4,

},

.endianness = DEVICE_LITTLE_ENDIAN,

};

static const MemoryRegionOps notify_pio_ops = {

.read = virtio_pci_notify_read,

.write = virtio_pci_notify_write_pio,

.impl = {

.min_access_size = 1,

.max_access_size = 4,

},

.endianness = DEVICE_LITTLE_ENDIAN,

};

static const MemoryRegionOps lm_ops = {

.read = virtio_pci_lm_read,

.write = virtio_pci_lm_write,

.impl = {

.min_access_size = 1,

.max_access_size = 4,

},

.endianness = DEVICE_LITTLE_ENDIAN,

};

g_autoptr(GString) name = g_string_new(NULL);

g_string_printf(name, "virtio-pci-common-%s", vdev_name);

memory_region_init_io(&proxy->common.mr, OBJECT(proxy),

&common_ops,

proxy,

name->str,

proxy->common.size);

g_string_printf(name, "virtio-pci-isr-%s", vdev_name);

memory_region_init_io(&proxy->isr.mr, OBJECT(proxy),

&isr_ops,

proxy,

name->str,

proxy->isr.size);

g_string_printf(name, "virtio-pci-device-%s", vdev_name);

memory_region_init_io(&proxy->device.mr, OBJECT(proxy),

&device_ops,

proxy,

name->str,

proxy->device.size);

g_string_printf(name, "virtio-pci-notify-%s", vdev_name);

memory_region_init_io(&proxy->notify.mr, OBJECT(proxy),

&notify_ops,

proxy,

name->str,

proxy->notify.size);

g_string_printf(name, "virtio-pci-notify-pio-%s", vdev_name);

memory_region_init_io(&proxy->notify_pio.mr, OBJECT(proxy),

&notify_pio_ops,

proxy,

name->str,

proxy->notify_pio.size);

if (proxy->flags & VIRTIO_PCI_FLAG_VDPA) {

g_string_printf(name, "virtio-pci-lm-%s", vdev_name);

memory_region_init_io(&proxy->lm.mr, OBJECT(proxy),

&lm_ops,

proxy,

name->str,

proxy->lm.size);

}

}
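Each subregion added to modern_bar receives addresses relative to its own start. With the default layout, a guest access at BAR offset 0x2004 therefore reaches virtio_pci_device_read() with addr 4. Below is a hypothetical sketch of that lookup, using the default offsets from virtio_pci_realize(). The `Toy*` names are ours. As far as we know, QEMU's memory core resolves this through its flattened view of the address space rather than through a linear scan like this one.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of modern_bar: a container with callback subregions. */
typedef uint64_t (*ToyReadFn)(void *opaque, uint64_t addr, unsigned size);

typedef struct {
    const char *name;
    uint64_t offset;       /* e.g. proxy->common.offset */
    uint64_t size;         /* e.g. proxy->common.size */
    ToyReadFn read;        /* e.g. virtio_pci_common_read */
} ToySubregion;

/* Route a read at bar_off to the subregion that fully contains it, passing
 * the offset relative to that subregion. Returns the subregion index, or
 * -1 if no subregion covers the access. */
static int toy_bar_dispatch_read(const ToySubregion *regs, size_t n,
                                 uint64_t bar_off, unsigned size,
                                 uint64_t *out)
{
    for (size_t i = 0; i < n; i++) {
        if (bar_off >= regs[i].offset &&
            bar_off + size <= regs[i].offset + regs[i].size) {
            *out = regs[i].read(NULL, bar_off - regs[i].offset, size);
            return (int)i;
        }
    }
    return -1;
}

/* Stand-in callback that just reports the relative address it received. */
static uint64_t toy_report_addr(void *opaque, uint64_t addr, unsigned size)
{
    (void)opaque;
    (void)size;
    return addr;
}
```

With the four default regions (common at 0x0, isr at 0x1000, device at 0x2000, notify at 0x3000), a read at 0x2004 lands in "device" with a relative address of 4.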

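The callbacks of the VIRTIO_PCI_CAP_COMMON_CFG region deserve a closer look. As described in the earlier VIRTIO_PCI_CAP_COMMON_CFG section, reading and writing its fields configures components such as the virtqueues and feature bits. The code above shows that QEMU handles these writes in virtio_pci_common_write():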

//#0 virtio_pci_common_write (opaque=0x5555580bdd70, addr=22, val=0, size=2) at ../../qemu/hw/virtio/virtio-pci.c:1689
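The `addr=22` in this backtrace is an offset into the common configuration structure. Laying out `struct virtio_pci_common_cfg` as defined in the virtio 1.x specification shows that offset 22 (0x16) is `queue_select`. So this 2-byte write selects queue 0 before the per-queue fields are programmed. Below is a sketch of the layout and a decoder. The enum names and `toy_common_cfg` are hypothetical. Splitting `queue_desc` into 32-bit halves is our reading of the `max_access_size = 4` in common_ops.

```c
#include <stddef.h>
#include <stdint.h>

/* struct virtio_pci_common_cfg from the virtio 1.x spec; fixed-width types
 * stand in for le16/le32/le64, only the offsets matter here. */
struct toy_common_cfg {
    uint32_t device_feature_select;  /* 0x00 */
    uint32_t device_feature;         /* 0x04 */
    uint32_t driver_feature_select;  /* 0x08 */
    uint32_t driver_feature;         /* 0x0c */
    uint16_t config_msix_vector;     /* 0x10 */
    uint16_t num_queues;             /* 0x12 */
    uint8_t  device_status;          /* 0x14 */
    uint8_t  config_generation;      /* 0x15 */
    uint16_t queue_select;           /* 0x16 = 22 */
    uint16_t queue_size;             /* 0x18 */
    uint16_t queue_msix_vector;      /* 0x1a */
    uint16_t queue_enable;           /* 0x1c */
    uint16_t queue_notify_off;       /* 0x1e */
    uint64_t queue_desc;             /* 0x20 */
    uint64_t queue_driver;           /* 0x28, available ring */
    uint64_t queue_device;           /* 0x30, used ring */
};

/* Hypothetical field IDs for the decoder below. */
enum cfg_field {
    F_OTHER, F_DEVICE_STATUS, F_QUEUE_SELECT, F_QUEUE_SIZE,
    F_QUEUE_ENABLE, F_QUEUE_DESC_LO, F_QUEUE_DESC_HI
};

/* Map a write offset (the addr argument of virtio_pci_common_write()) to a
 * field; the 64-bit queue_desc is decoded as two 32-bit halves. */
static enum cfg_field decode_common_offset(uint64_t addr)
{
    switch (addr) {
    case offsetof(struct toy_common_cfg, device_status):  return F_DEVICE_STATUS;
    case offsetof(struct toy_common_cfg, queue_select):   return F_QUEUE_SELECT;
    case offsetof(struct toy_common_cfg, queue_size):     return F_QUEUE_SIZE;
    case offsetof(struct toy_common_cfg, queue_enable):   return F_QUEUE_ENABLE;
    case offsetof(struct toy_common_cfg, queue_desc):     return F_QUEUE_DESC_LO;
    case offsetof(struct toy_common_cfg, queue_desc) + 4: return F_QUEUE_DESC_HI;
    default:                                              return F_OTHER;
    }
}
```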

Data processing

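Data processing consists of two parts: data transfer and notification. Data transfer is implemented through shared memory, while notification is built mainly on the kernel's eventfd mechanism.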

Data transfer

Guest physical memory is mapped into QEMU's process address space, so QEMU can naturally access any guest physical address.

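Therefore, the virtio device only needs the guest physical addresses (GPAs) that the guest allocated for a virtqueue. From those it can find the corresponding host virtual addresses (HVAs) in QEMU's address space and access the memory directly. This translation is set up in virtio_queue_set_rings().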

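Specifically, as described in the virtio components subsection, QEMU calls virtio_queue_set_rings() when the guest writes the VIRTIO_PCI_COMMON_Q_ENABLE field to finish setting up a virtqueue. The relevant code is shown below: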

void virtio_queue_set_rings(VirtIODevice *vdev, int n, hwaddr desc,

hwaddr avail, hwaddr used)

{

if (!vdev->vq[n].vring.num) {

return;

}

vdev->vq[n].vring.desc = desc;

vdev->vq[n].vring.avail = avail;

vdev->vq[n].vring.used = used;

virtio_init_region_cache(vdev, n);

}

void virtio_init_region_cache(VirtIODevice *vdev, int n)

{

VirtQueue *vq = &vdev->vq[n];

VRingMemoryRegionCaches *old = vq->vring.caches;

VRingMemoryRegionCaches *new = NULL;

hwaddr addr, size;

int64_t len;

bool packed;

...

new = g_new0(VRingMemoryRegionCaches, 1);

size = virtio_queue_get_desc_size(vdev, n);

packed = virtio_vdev_has_feature(vq->vdev, VIRTIO_F_RING_PACKED) ?

true : false;

len = address_space_cache_init(&new->desc, vdev->dma_as,

addr, size, packed);

if (len < size) {

virtio_error(vdev, "Cannot map desc");

goto err_desc;

}

size = virtio_queue_get_used_size(vdev, n);

len = address_space_cache_init(&new->used, vdev->dma_as,

vq->vring.used, size, true);

if (len < size) {

virtio_error(vdev, "Cannot map used");

goto err_used;

}

size = virtio_queue_get_avail_size(vdev, n);

len = address_space_cache_init(&new->avail, vdev->dma_as,

vq->vring.avail, size, false);

if (len < size) {

virtio_error(vdev, "Cannot map avail");

goto err_avail;

}

qatomic_rcu_set(&vq->vring.caches, new);

if (old) {

call_rcu(old, virtio_free_region_cache, rcu);

}

return;

...

}

int64_t address_space_cache_init(MemoryRegionCache *cache,

AddressSpace *as,

hwaddr addr,

hwaddr len,

bool is_write)

{

AddressSpaceDispatch *d;

hwaddr l;

MemoryRegion *mr;

Int128 diff;

assert(len > 0);

l = len;

cache->fv = address_space_get_flatview(as);

d = flatview_to_dispatch(cache->fv);

cache->mrs = *address_space_translate_internal(d, addr, &cache->xlat, &l, true);

/*

* cache->xlat is now relative to cache->mrs.mr, not to the section itself.

* Take that into account to compute how many bytes are there between

* cache->xlat and the end of the section.

*/

diff = int128_sub(cache->mrs.size,

int128_make64(cache->xlat - cache->mrs.offset_within_region));

l = int128_get64(int128_min(diff, int128_make64(l)));

mr = cache->mrs.mr;

memory_region_ref(mr);

if (memory_access_is_direct(mr, is_write)) {

/* We don't care about the memory attributes here as we're only

* doing this if we found actual RAM, which behaves the same

* regardless of attributes; so UNSPECIFIED is fine.

*/

l = flatview_extend_translation(cache->fv, addr, len, mr,

cache->xlat, l, is_write,

MEMTXATTRS_UNSPECIFIED);

cache->ptr = qemu_ram_ptr_length(mr->ram_block, cache->xlat, &l, true);

} else {

cache->ptr = NULL;

}

cache->len = l;

cache->is_write = is_write;

return l;

}

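Following QEMU's memory model, this code translates the virtqueue's GPAs directly into QEMU HVAs and stores them in a VRingMemoryRegionCaches structure. The virtio device can then access virtqueue data directly through this structure, while the guest accesses the same memory through its GPAs. This shared memory is what implements data transfer.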
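The sketch below shows how much guest memory each split-ring part covers, and how a GPA range becomes a host pointer with a bounds check. The check is similar in spirit to the `len < size` tests in virtio_init_region_cache(). The size formulas follow the `virtq_desc`, `virtq_avail` and `virtq_used` layouts from the virtqueue section. We believe they match what QEMU's `virtio_queue_get_*_size()` helpers compute, including the extra 2 bytes with VIRTIO_RING_F_EVENT_IDX. `ToyRamBlock` and `toy_gpa_to_hva()` are hypothetical and model a single contiguous RAM block.

```c
#include <stddef.h>
#include <stdint.h>

/* Split-ring sizes from the virtq_desc / virtq_avail / virtq_used layouts.
 * event_idx adds the trailing used_event / avail_event field. */
static uint64_t desc_table_size(uint16_t num)
{
    return 16ull * num;                            /* 16-byte virtq_desc */
}

static uint64_t avail_ring_size(uint16_t num, int event_idx)
{
    return 4 + 2ull * num + (event_idx ? 2 : 0);   /* flags, idx, ring[num] */
}

static uint64_t used_ring_size(uint16_t num, int event_idx)
{
    return 4 + 8ull * num + (event_idx ? 2 : 0);   /* flags, idx, {id, len}[num] */
}

/* Hypothetical single RAM block: guest-physical [gpa_base, gpa_base + len)
 * is backed by host memory starting at hva_base in the QEMU process. */
typedef struct {
    uint64_t gpa_base;
    uint64_t len;
    uint8_t *hva_base;
} ToyRamBlock;

/* Return an HVA for [gpa, gpa + size), or NULL if the range is not fully
 * inside the block. */
static void *toy_gpa_to_hva(const ToyRamBlock *rb, uint64_t gpa, uint64_t size)
{
    if (gpa < rb->gpa_base || size > rb->len ||
        gpa - rb->gpa_base > rb->len - size) {
        return NULL;
    }
    return rb->hva_base + (gpa - rb->gpa_base);
}
```

For a 256-entry queue, the descriptor table is 4096 bytes, the available ring 516 bytes, and the used ring 2052 bytes (2054 with EVENT_IDX). Anything the device writes through the returned pointer is immediately visible to the guest at the original GPA, because both refer to the same memory.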

Notification

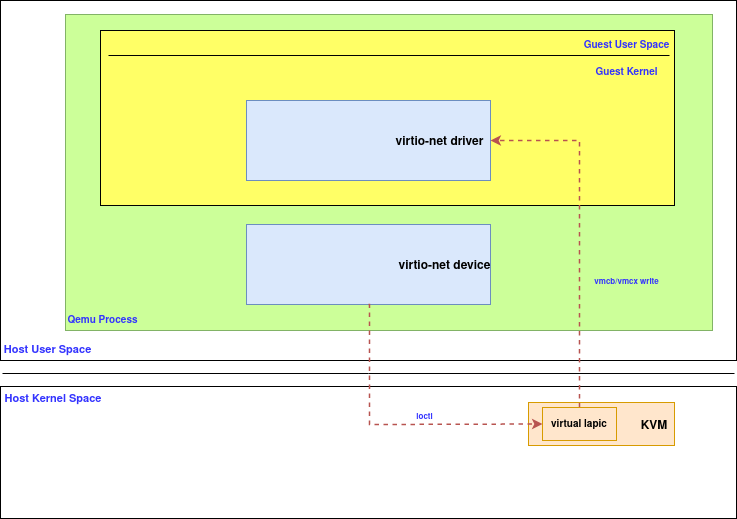

Notification has two directions: the guest notifies the virtio device (ioeventfd), and the virtio device interrupts the guest (ioctl).

The first direction is built on the kernel's eventfd mechanism, which the eventfd(2) man page describes as follows:

eventfd() creates an “eventfd object” that can be used as an

event wait/notify mechanism by user-space applications, and by

the kernel to notify user-space applications of events.
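A minimal, Linux-only demonstration of the eventfd semantics that ioeventfd relies on follows. Each write adds to a 64-bit counter, and a read returns the accumulated count and resets it. Several guest kicks that arrive before the handler runs therefore collapse into a single wakeup. `toy_kick()` and `toy_drain()` are hypothetical helpers. With ioeventfd, it is KVM, not user space, that signals the eventfd when the guest writes the notify address.

```c
#include <stdint.h>
#include <sys/eventfd.h>
#include <sys/types.h>
#include <unistd.h>

/* Notifier side: each write(2) adds the value to the eventfd counter.
 * With ioeventfd, KVM signals the eventfd in the kernel when the guest
 * writes the notify address; here user space writes it directly. */
static int toy_kick(int efd)
{
    uint64_t one = 1;
    return write(efd, &one, sizeof one) == (ssize_t)sizeof one ? 0 : -1;
}

/* Handler side: read(2) returns the accumulated count and resets it to 0,
 * so several kicks before the handler runs are coalesced into one read. */
static int64_t toy_drain(int efd)
{
    uint64_t count = 0;
    if (read(efd, &count, sizeof count) != (ssize_t)sizeof count) {
        return -1;
    }
    return (int64_t)count;
}
```

With `eventfd(0, EFD_NONBLOCK)`, two kicks followed by a drain return 2. A second drain then fails with EAGAIN because the counter is back to zero.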

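The second direction relies on a hardware-provided mechanism. The CPU, through KVM, exposes an interface for injecting interrupts into the guest, and the virtio device simply invokes it via ioctl.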

Notifying the device

The ioeventfd mechanism is illustrated in the diagram below.

As described in the virtio components subsection, when the guest writes the VIRTIO_PCI_COMMON_STATUS field to complete device setup, QEMU calls virtio_pci_start_ioeventfd() to set up the eventfds, as shown below:
